New Blog and the Technology that powers it

As you may have noticed, I’ve migrated my blog from Tumblr to a shiny Ghost-powered engine. While it does feel like I’m leaving the social media buzzword bingo revolution behind, editing on Tumblr wasn’t exactly made with lengthy InfoSec posts in mind.

Of course, moving to Ghost in my case means provisioning and managing a new server, so I wanted to take some time to document the architecture of my solution in case anyone else is looking to make a similar change in the future.

Hosting

My hosting provider of choice is DigitalOcean, which I have used for a few years for some of my personal servers. To begin with, I’ve selected a low-end VPS, with the intention of reviewing usage over the coming months to see if this will be sufficient to run Ghost.

If you are thinking of using DigitalOcean, please consider using my referral code: https://m.do.co/c/2279fc91b612, which gets you $10 in credit (and also gives me some pennies to continue hosting this blog ;).

Docker

I am a fan of containerisation, so I decided to use Docker to host the components needed for Ghost. The images that I’ve used are:

- ghost

- nginx

- quay.io/letsencrypt/letsencrypt

Keeping functionality within a container also helps with the backup and restore of the server when the worst does happen.

The ghost container can be run with the following command:

docker run -v $BLOGROOT/ghost:/var/lib/ghost \
  --name exampleblog \
  --expose=2368 \
  -e "NODE_ENV=production" \
  -d ghost

By using a volume, any files added to Ghost are available for backup from the host.

To expose this service to the world, I’ve used nginx as a reverse proxy, which can be run with the following parameters:

docker run -v $BLOGROOT/nginx/conf.d:/etc/nginx/conf.d \
  -v $BLOGROOT/nginx/logs/:/logs/ \
  -v $BLOGROOT/nginx/html:/var/www/html \
  -v $BLOGROOT/nginx/letsencrypt:/etc/letsencrypt \
  -it --name exampleblog-nginx \
  -p 80:80 \
  -p 443:443 \
  --link exampleblog \
  -d nginx

Again, volumes make the logs available to the host for review and backup, and allow the configuration files to be passed in from the host.

Something that I’ve never used much before is the “--link” option, which in this case makes the ghost container available to the nginx container and writes a new /etc/hosts entry with its IP, meaning we can refer to the Ghost HTTP service via “http://exampleblog:2368/”.
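The nginx configuration itself isn’t shown in this post, so here is a rough sketch of what a matching conf.d file could look like, wrapped in a small shell function that writes it out. The file name blog.conf, the log file names and the HTTP to HTTPS redirect are my own assumptions; the certificate paths follow the usual Let’s Encrypt live/ layout and assume a certificate has already been issued. It is also worth knowing that Docker now describes “--link” as a legacy feature, with user-defined networks offering the same name-based lookup.

```shell
#!/bin/sh
# Sketch only: writes a hypothetical reverse-proxy config for the linked
# "exampleblog" container. Nothing is executed until the function is called.
write_nginx_conf() {
    conf_dir=$1
    mkdir -p "$conf_dir" || return 1
    # Quoted heredoc delimiter so nginx variables such as $host stay literal.
    cat > "$conf_dir/blog.conf" <<'EOF'
server {
    listen 80;
    server_name blog.xpnsec.com;

    # Served from the shared webroot for Let's Encrypt verification files.
    location /.well-known/acme-challenge/ {
        root /var/www/html;
    }

    location / {
        return 301 https://$host$request_uri;
    }
}

server {
    listen 443 ssl;
    server_name blog.xpnsec.com;

    ssl_certificate     /etc/letsencrypt/live/blog.xpnsec.com/fullchain.pem;
    ssl_certificate_key /etc/letsencrypt/live/blog.xpnsec.com/privkey.pem;

    # /logs is the volume shared with the host (log file names are assumed).
    access_log /logs/access.log;
    error_log  /logs/error.log;

    location / {
        # "exampleblog" resolves via the /etc/hosts entry written by --link.
        proxy_pass http://exampleblog:2368;
        proxy_set_header Host $host;
        proxy_set_header X-Real-IP $remote_addr;
        proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;
        proxy_set_header X-Forwarded-Proto $scheme;
    }
}
EOF
}
```

Calling write_nginx_conf "$BLOGROOT/nginx/conf.d" before starting the nginx container would place the file where the conf.d volume expects it.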

For Let’s Encrypt, I use another container, which is run monthly via a cron job. As our nginx webroot and configuration are exposed to the host, we can use the running nginx container to serve the required Let’s Encrypt verification files by starting the Docker image with the following:

docker run -it --rm --name letsencrypt \
  -v "$BLOGROOT/nginx/letsencrypt:/etc/letsencrypt" \
  -v "/var/lib/letsencrypt:/var/lib/letsencrypt" \
  -v "$BLOGROOT/nginx/html:/var/www/html" \
  quay.io/letsencrypt/letsencrypt certonly \
  --webroot \
  --webroot-path /var/www/html \
  --agree-tos \
  --renew-by-default \
  -d blog.xpnsec.com \
  -m xpnsec[at]protonmail.com

The beauty of this type of architecture is that to recover from a disaster, all that is needed is a backup of the files shared from the host, and the above commands to spin up your docker containers… well, that’s the idea :)
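For the monthly renewal mentioned above, the cron side could be wired up along the lines of the sketch below. The script path, the BLOGROOT default, the LE_EMAIL variable and the crontab schedule are all placeholders of mine, as is the reload step at the end. One difference from the interactive command: cron provides no TTY, so the -it flags are dropped here, as docker would otherwise exit with an “input device is not a TTY” error.

```shell
#!/bin/sh
# Sketch of a renewal wrapper. All defaults below are hypothetical.
BLOGROOT=${BLOGROOT:-/srv/blog}
LE_EMAIL=${LE_EMAIL:-admin@example.com}
DOCKER=${DOCKER:-docker}

renew_cert() {
    # Same certonly invocation as above, minus -it (no TTY under cron).
    "$DOCKER" run --rm --name letsencrypt \
        -v "$BLOGROOT/nginx/letsencrypt:/etc/letsencrypt" \
        -v "/var/lib/letsencrypt:/var/lib/letsencrypt" \
        -v "$BLOGROOT/nginx/html:/var/www/html" \
        quay.io/letsencrypt/letsencrypt certonly \
        --webroot \
        --webroot-path /var/www/html \
        --agree-tos \
        --renew-by-default \
        -d blog.xpnsec.com \
        -m "$LE_EMAIL" || return 1

    # nginx only reads certificates at start-up or reload, so ask the
    # running container to reload once the new certificate is in place.
    "$DOCKER" exec exampleblog-nginx nginx -s reload
}

# Only renew when invoked as "renew-cert.sh run".
if [ "${1:-}" = "run" ]; then
    renew_cert
fi

# Example crontab entry (03:00 on the 1st of each month):
# 0 3 1 * * BLOGROOT=/srv/blog /usr/local/bin/renew-cert.sh run
```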

Hardening

During a server build, I use Ansible to deploy an Ubuntu hardening script, which takes care of:

- Installing updates

- Configuring iptables rules and iptables-persistent

- Tightening up sysctl settings

- Disabling IPv6

- Installing and configuring FIM and HIDS

- Adding log monitoring

- Updating SSHd configuration

I’m hoping to release the Ansible role in the near future once it’s ready. If you are looking for something in the meantime, there are publicly available configurations to take inspiration from.

Backup

At first, I considered using DigitalOcean’s backup service; however, adding 20% to my running server cost isn’t something I could justify for such a small volume of data. Instead, I opted for S3, which allows me to ship my backups to Amazon for a few cents a month.
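As a rough idea of what shipping a backup can look like, here is a minimal sketch that archives everything shared from the host and copies it to S3 with the AWS CLI. The BLOGROOT default and the object key are assumptions of mine; the bucket name is the example one used later in this post.

```shell
#!/bin/sh
# Sketch of a backup job. Defaults are placeholders, not real settings.
BLOGROOT=${BLOGROOT:-/srv/blog}
BACKUP_BUCKET=${BACKUP_BUCKET:-xpnexample-backup}
BACKUP_KEY=${BACKUP_KEY:-blog.xpnsec.com/blog-backup.tar.gz}

# Pack the directory shared with the containers into one gzip'd tarball.
make_archive() {
    src_dir=$1
    archive=$2
    tar -czf "$archive" -C "$src_dir" .
}

# Upload to the same key on every run.
upload_archive() {
    aws s3 cp "$1" "s3://$BACKUP_BUCKET/$BACKUP_KEY"
}

run_backup() {
    archive=$(mktemp) || return 1
    make_archive "$BLOGROOT" "$archive" && upload_archive "$archive"
    status=$?
    rm -f "$archive"
    return "$status"
}
```

Calling run_backup from a nightly cron job would be one way to schedule it.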

To help provide some redundancy for when the worst does happen, I utilise S3 Versioning, which allows me to overwrite the same backup and keep a history of changes. On top of this, I use S3’s Lifecycle functionality to ensure that old backups are cleaned up after 90 days.
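For anyone wanting to set up something similar from the command line, the sketch below enables versioning and applies a lifecycle rule using the AWS CLI. I’m assuming here that the 90 day clean-up targets older (noncurrent) versions of the backup; the rule ID is made up, and the commands need to be run with credentials that are allowed to administer the bucket.

```shell
#!/bin/sh
# Sketch only: bucket name is the example bucket from this post.
BACKUP_BUCKET=${BACKUP_BUCKET:-xpnexample-backup}

# Write a lifecycle configuration that expires superseded versions
# 90 days after they stop being the current version.
write_lifecycle_rules() {
    cat > "$1" <<'EOF'
{
  "Rules": [
    {
      "ID": "expire-old-backup-versions",
      "Status": "Enabled",
      "Filter": { "Prefix": "" },
      "NoncurrentVersionExpiration": { "NoncurrentDays": 90 }
    }
  ]
}
EOF
}

configure_bucket() {
    rules=$(mktemp) || return 1
    write_lifecycle_rules "$rules"
    aws s3api put-bucket-versioning \
        --bucket "$BACKUP_BUCKET" \
        --versioning-configuration Status=Enabled &&
    aws s3api put-bucket-lifecycle-configuration \
        --bucket "$BACKUP_BUCKET" \
        --lifecycle-configuration "file://$rules"
    status=$?
    rm -f "$rules"
    return "$status"
}
```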

To provide protection against any backups being wiped, either through mistake or malice, I created a new policy granting only the following access to the backup bucket:

"Action": [
  "s3:PutObject",
  "s3:GetObject",
  "s3:GetObjectVersion"
],

This allows data to be pushed to my S3 backup bucket, but does not grant the ability to delete it once pushed. For example:

user@vypr-blog:/home/user$ aws s3 cp /tmp/test.txt s3://xpnexample-backup/blog.xpnsec.com/test.txt
upload: ../../tmp/test.txt to s3://xpnexample-backup/blog.xpnsec.com/test.txt
user@vypr-blog:/home/user$ aws s3 rm s3://xpnexample-backup/blog.xpnsec.com/test.txt
delete failed: s3://xpnexample-backup/blog.xpnsec.com/test.txt An error occurred (AccessDenied) when calling the DeleteObject operation: Access Denied

Monitoring

To help monitor the accessibility of the server and blog, and alert to any strange behaviour, I have used a combination of tools.

The first tool that I use for notification is ntfy, which supports Pushover. As a massive Apple fanboy, I like that Pushover works on all of my iOS and Apple Watch devices, meaning that I can receive alerts when needed.

Next is good old grep and bash, which monitor the status of the server and its logs for anything which may resemble an issue, firing an alert via ntfy if anything is detected.
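To give a flavour of the grep-and-alert approach, here is a small sketch that counts HTTP 5xx responses in an nginx access log and sends a notification once a threshold is reached. It assumes the default “combined” log format and that the ntfy CLI is configured with a Pushover backend so that “ntfy send” delivers the message; the function names and threshold are only illustrative, not my actual scripts.

```shell
#!/bin/sh
# Sketch only: NOTIFY_CMD can be overridden, e.g. for testing.

# Count lines whose status field is 5xx. In the combined log format the
# status follows the quoted request line, hence the leading quote and space.
count_5xx() {
    # grep -c exits non-zero when the count is 0, so ignore its status.
    grep -c '" 5[0-9][0-9] ' "$1" || true
}

# Send a message using the configured notifier (ntfy by default).
notify() {
    ${NOTIFY_CMD:-ntfy send} "$1"
}

# Alert when the number of 5xx responses reaches the threshold (default 1).
check_log() {
    log_file=$1
    threshold=${2:-1}
    errors=$(count_5xx "$log_file")
    if [ "${errors:-0}" -ge "$threshold" ]; then
        notify "blog: $errors HTTP 5xx responses in $log_file"
    fi
}
```

A cron entry calling check_log against the shared nginx log directory every few minutes would be one way to run it.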

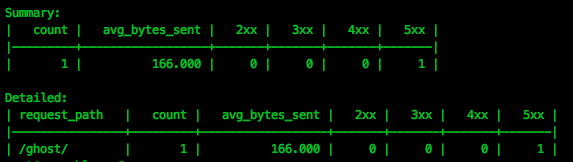

One interesting tool I came across which is worth a mention is ngxtop, which can query nginx logs for interesting data, for example, showing pages which result in an HTTP 500.
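A query along those lines might look like the sketch below, based on ngxtop’s documented -l (access log), --no-follow and -i (filter expression) options; it is worth checking against “ngxtop --help” for your installed version. The log path and BLOGROOT default are assumptions.

```shell
#!/bin/sh
# Sketch only: defaults are placeholders.
NGXTOP=${NGXTOP:-ngxtop}
BLOGROOT=${BLOGROOT:-/srv/blog}

# Summarise requests that returned HTTP 500, grouped by request path
# (ngxtop's default grouping). --no-follow parses what is already in the
# log instead of waiting for new lines to arrive.
show_500s() {
    log_file=${1:-$BLOGROOT/nginx/logs/access.log}
    "$NGXTOP" -l "$log_file" --no-follow -i 'status == 500'
}
```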

Improvements

I always try to design any of my servers as though they will be hacked/burned/stolen/sold at some point in the near future, so whilst some of this design may seem overkill, by planning for that inevitable moment, I can hopefully save myself some valuable time in getting services back up and running.

To quote Jack:

That being said, if you can offer any improvements, or spot any design mistakes, please let me know via the usual methods :)

Hopefully this is of some use to anyone looking to make the leap over to a hosted Ghost blog.