Bring Your Own VM - Mac Edition

For a while I’ve wanted to explore the concept of leveraging a virtual machine on target during an engagement. The thought of having implant logic self-contained and running under a different OS from the host seems pretty interesting. More than that, I’ve been curious as to just how far traditional AV and EDR can go to detect malicious activity when it runs from inside a separate virtual environment. While this is a nice idea, the issues with creating this type of malware are obvious: increased complexity, size and compatibility issues all limit what is viable against a target. Some real-world attackers have put this concept into practice, albeit clumsily (see the Ragnar and Maze malware), but deploying a full VirtualBox solution with Windows XP or Windows 7 on target isn’t exactly ideal.

With the introduction of Big Sur came the Virtualization framework, which alleviates a number of these limitations. Now that a supported framework ships with macOS 11, it’s a good time to explore the concept. So in this post we will walk through how to spin up a small VM using the Virtualization framework, look at how this works for us as attackers, and hopefully give defenders a point of reference when looking to protect their Apple customers.

Virtualization.framework

With Big Sur came a framework which I initially didn’t pay much attention to, until I saw a tweet by @KhaosT unveiling his SimpleVM project. Looking at the code, I was sure I was missing something: everything was so concise and clean, unlike my previous explorations of hypervisors. Within a very short space of time it was possible to get a POC up and running, showing just how intuitive this framework is to work with:

Playing around with Apple's Virtualization framework in Swift on M1. Cool to create an small ARM64 Linux VM complete with NAT networking… Wonder how far down the rabbit hole EDR/AV vendors will go ;) pic.twitter.com/QqNYyzBYon

— Adam Chester (@xpn) November 26, 2020

So what is the Virtualization framework? Those of us who have been developing on Mac may know its lower-level counterpart, the Hypervisor framework, which exposes hypervisor functions to user-mode applications via a set of APIs. This technology gave birth to xhyve, a neat project later adopted by Docker, who moulded it into the Linux VM which underpins Docker on Intel Macs.

Speaking of Docker, it turns out that Docker has also adopted the Virtualization framework for its Linux VM on M1 Macs. For example, anyone running the latest M1 preview of Docker can interact with its Linux VM by connecting to the exposed Unix socket:

```shell
socat - UNIX-CONNECT:$HOME/Library/Containers/com.docker.docker/Data/vms/0/console.sock
```

The key difference between the Hypervisor and Virtualization frameworks is that the latter acts as an abstraction layer sitting above the Hypervisor framework, exposing a simple set of APIs which let us provide a number of configuration options and device settings to spin up a VM quickly. While in its current form it is quite limited (for example, I don’t currently see any tooling to list or manage running virtual machines), with support for Linux out of the box we now have a very simple and supported way to create our VM programmatically. Let’s start by taking a look at just how simple it is to create a new VM.
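Because the Virtualization framework sits on top of the Hypervisor framework, both layers can be probed before trying to build a VM. The sketch below is my own illustration rather than part of the POC: it reads the kern.hv_support sysctl, which Apple documents as the way to check Hypervisor framework support, and the VZVirtualMachine.isSupported class property. The helper name kernelReportsHypervisorSupport is hypothetical.

```swift
import Foundation
import Virtualization

// Sketch (hypothetical helper): probe both layers before building a VM.
// kern.hv_support reads 1 when the CPU and kernel can host a hypervisor.
func kernelReportsHypervisorSupport() -> Bool {
    var supported: Int32 = 0
    var size = MemoryLayout<Int32>.size
    // sysctlbyname returns 0 on success and fills `supported`
    guard sysctlbyname("kern.hv_support", &supported, &size, nil, 0) == 0 else {
        return false
    }
    return supported == 1
}

print("Hypervisor framework usable: \(kernelReportsHypervisorSupport())")
// VZVirtualMachine.isSupported asks the Virtualization framework itself
print("Virtualization framework usable: \(VZVirtualMachine.isSupported)")
```

If the first check fails (for example inside a VM without nested virtualization), there is little point going any further.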

Building a VM with Virtualization.framework

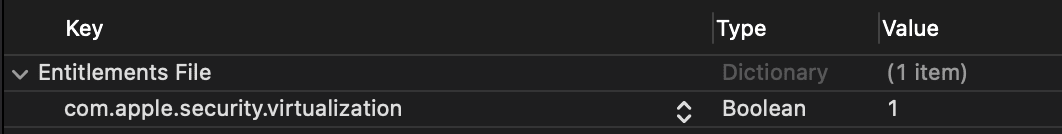

To build our POC we’ll use Swift. The first thing we need to do is set up a few prerequisites, mainly adding the com.apple.security.virtualization entitlement (a Boolean set to true in the app’s entitlements file), which is required to interact with the framework.
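Entitlements are only honoured once the binary is code signed (as far as I can tell an ad hoc signature is enough for this particular entitlement). Since a missing entitlement is an easy mistake to make, one way to confirm the signed binary really carries it is to ask the Security framework at runtime. This is a sketch of my own, not part of the POC; hasBooleanEntitlement is a hypothetical helper built on SecTaskCreateFromSelf and SecTaskCopyValueForEntitlement.

```swift
import Foundation
import Security

// Sketch (hypothetical helper): returns true only if this process was signed
// with the named entitlement set to the Boolean value true.
func hasBooleanEntitlement(_ name: String) -> Bool {
    guard let task = SecTaskCreateFromSelf(nil) else { return false }
    // nil when the entitlement is absent from the code signature
    let value = SecTaskCopyValueForEntitlement(task, name as CFString, nil)
    return (value as? Bool) ?? false
}

let entitlement = "com.apple.security.virtualization"
print("\(entitlement): \(hasBooleanEntitlement(entitlement))")
```

Printing false here usually means the entitlements file was not attached when the binary was signed.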

Next we need to create a bootloader which is exposed via a VZLinuxBootLoader object. In this example we will look to boot a compiled aarch64 kernel along with a ramdisk which will house a very small set of essential tools:

```swift
let bootloader = VZLinuxBootLoader(kernelURL: URL(fileURLWithPath: "/tmp/kernel"))
bootloader.initialRamdiskURL = URL(fileURLWithPath: "/tmp/ramdisk")
// Send the kernel console to the virtio console device (hvc0)
bootloader.commandLine = "console=hvc0"
```

Following this we want to create a network device to expose our VM to the outside world. Apple supports two configurations: a NAT network device and a bridged network device. Unfortunately, in true Apple style, the bridged device is walled behind entitlements which require you to grovel to your local Apple representative and justify your case, so let’s stick with NAT for now and assign a random MAC address:

```swift
let networkDevice = VZVirtioNetworkDeviceConfiguration()
networkDevice.attachment = VZNATNetworkDeviceAttachment()
networkDevice.macAddress = VZMACAddress.randomLocallyAdministered()
```

We can also add a virtio serial connection so we can interact with the VM while debugging, although you may want to omit this depending on your needs:

```swift
let serialPort = VZVirtioConsoleDeviceSerialPortConfiguration()
// The guest reads its input from our stdin and writes its output to our stdout
serialPort.attachment = VZFileHandleSerialPortAttachment(
    fileHandleForReading: FileHandle.standardInput,
    fileHandleForWriting: FileHandle.standardOutput
)
```

With our devices created, we next need to create our virtual machine configuration, which associates the devices with our soon-to-be-created VM, along with the number of CPU cores and the memory allocation we require:

```swift
// Configuration of devices for this VM
let config = VZVirtualMachineConfiguration()
config.bootLoader = bootloader
config.serialPorts = [serialPort]
config.networkDevices = [networkDevice]

// Set our CPU core count and memory size (2 GiB, in bytes)
config.cpuCount = 2
config.memorySize = 2 * 1024 * 1024 * 1024
```

Once created, we then validate our configuration:

```swift
try config.validate()
```

We then create our VM from the configuration:

```swift
self.virtualMachine = VZVirtualMachine(configuration: config)
```

And start up the VM:

```swift
self.virtualMachine!.start { result in
    switch result {
    case .success:
        break
    case .failure(let error):
        // Surface the reason rather than failing silently
        print("Failed to start VM: \(error)")
    }
}
```

Other than some minor scaffolding and kicking off a run loop, that’s pretty much all there is to it. Hopefully you can now see why I was so excited to see this in action, and why this technology offers us as attackers a number of advantages when targeting macOS endpoints.
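For completeness, here is a sketch of what that scaffolding could look like as a command-line main.swift. It wires together the snippets above (with the serial handles in stdin/stdout order) and adds a VZVirtualMachineDelegate so the process exits when the guest stops. Taking the kernel and initrd paths from the command line, and the names makeConfiguration, runVM and VMDelegate, are my own assumptions rather than what the published POC does; the binary still needs the com.apple.security.virtualization entitlement.

```swift
import Foundation
import Virtualization

// Hypothetical delegate: exit when the guest shuts down or the VM fails.
final class VMDelegate: NSObject, VZVirtualMachineDelegate {
    func guestDidStop(_ virtualMachine: VZVirtualMachine) {
        print("Guest stopped")
        exit(0)
    }

    func virtualMachine(_ virtualMachine: VZVirtualMachine, didStopWithError error: Error) {
        print("VM stopped with error: \(error)")
        exit(1)
    }
}

// Same devices as above; the paths are passed in (an assumption for this sketch).
func makeConfiguration(kernel: URL, initrd: URL) throws -> VZVirtualMachineConfiguration {
    let bootloader = VZLinuxBootLoader(kernelURL: kernel)
    bootloader.initialRamdiskURL = initrd
    bootloader.commandLine = "console=hvc0"

    let networkDevice = VZVirtioNetworkDeviceConfiguration()
    networkDevice.attachment = VZNATNetworkDeviceAttachment()
    networkDevice.macAddress = VZMACAddress.randomLocallyAdministered()

    let serialPort = VZVirtioConsoleDeviceSerialPortConfiguration()
    serialPort.attachment = VZFileHandleSerialPortAttachment(
        fileHandleForReading: FileHandle.standardInput,
        fileHandleForWriting: FileHandle.standardOutput
    )

    let config = VZVirtualMachineConfiguration()
    config.bootLoader = bootloader
    config.serialPorts = [serialPort]
    config.networkDevices = [networkDevice]
    config.cpuCount = 2
    config.memorySize = 2 * 1024 * 1024 * 1024 // 2 GiB
    try config.validate()
    return config
}

let delegate = VMDelegate()

// Builds and starts the VM, then services it from the main run loop; never returns.
func runVM(kernel: URL, initrd: URL) -> Never {
    let vm: VZVirtualMachine
    do {
        vm = VZVirtualMachine(configuration: try makeConfiguration(kernel: kernel, initrd: initrd))
    } catch {
        print("Invalid configuration: \(error)")
        exit(1)
    }
    vm.delegate = delegate
    vm.start { result in
        if case .failure(let error) = result {
            print("Failed to start VM: \(error)")
            exit(1)
        }
    }
    // The VM is driven from the main queue, so keep the main run loop alive.
    withExtendedLifetime(vm) {
        RunLoop.main.run(until: .distantFuture)
    }
    exit(0)
}

if CommandLine.arguments.count == 3 {
    runVM(kernel: URL(fileURLWithPath: CommandLine.arguments[1]),
          initrd: URL(fileURLWithPath: CommandLine.arguments[2]))
} else {
    print("usage: \(CommandLine.arguments[0]) <kernel> <initrd>")
}
```

Used as something like ./byovm /tmp/kernel /tmp/ramdisk after signing with the entitlement.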

M1 or x64

With the launch of Apple’s M1 chip, we now have two architectures to consider when dropping an implant on an endpoint. Thankfully, Big Sur brings Universal 2 binaries, which means we can bundle both ARM64 and x64 code into one binary and leave it to the OS to select the slice matching the underlying hardware.
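To see which slice the OS actually picked, a process can check at compile time which architecture it was built for, and at runtime whether an x86_64 slice is being translated by Rosetta 2 via the sysctl.proc_translated sysctl, which Apple documents for this purpose. A minimal sketch; the function names are hypothetical:

```swift
import Foundation

// Sketch (hypothetical helper): which slice of a universal binary is running?
// The answer is fixed at compile time for each slice.
func compiledArchitecture() -> String {
    #if arch(arm64)
    return "arm64"
    #elseif arch(x86_64)
    return "x86_64"
    #else
    return "other"
    #endif
}

// sysctl.proc_translated reads 1 when an x86_64 process runs under Rosetta 2.
// The key does not exist on Intel Macs, so a failed lookup means "not translated".
func isTranslatedByRosetta() -> Bool {
    var translated: Int32 = 0
    var size = MemoryLayout<Int32>.size
    guard sysctlbyname("sysctl.proc_translated", &translated, &size, nil, 0) == 0 else {
        return false
    }
    return translated == 1
}

print("Running slice: \(compiledArchitecture()), translated by Rosetta: \(isTranslatedByRosetta())")
```

On an M1 the native arm64 slice should report no translation, while forcing the x86_64 slice (for example with arch -x86_64) should report translation.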

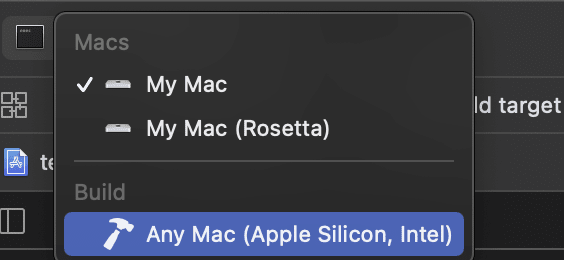

As we are building our project in Swift using Xcode, all of the heavy lifting of compiling for multiple architectures is done for us. All we need to do is ensure that our project targets both x64 and ARM64, which we do via the following option:

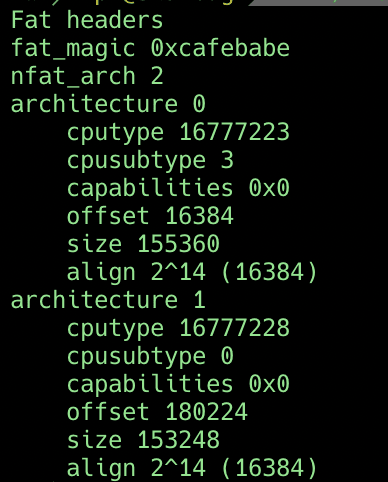

Once compiled, running file or otool -f shows that our binary now contains both an x64 and an ARM64 variant.
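Both tools read the fat (universal) header at the start of the file. As a rough illustration, the same information can be pulled out in a few lines of Swift. This sketch only handles the classic 32-bit fat header (magic 0xCAFEBABE, big-endian fields, 20-byte fat_arch entries) and only names the two CPU types we care about; fatArchitectures is a hypothetical helper.

```swift
import Foundation

// Sketch (hypothetical helper): list the slices in a 32-bit fat Mach-O header.
// FAT_MAGIC_64 headers are not handled. Returns nil for non-fat input.
func fatArchitectures(in data: Data) -> [String]? {
    // Fat header fields are always stored big-endian on disk.
    func be32(_ offset: Int) -> UInt32? {
        guard offset >= 0, offset + 4 <= data.count else { return nil }
        let start = data.startIndex + offset
        return data[start ..< start + 4].reduce(UInt32(0)) { ($0 << 8) | UInt32($1) }
    }
    guard be32(0) == 0xCAFEBABE, let count = be32(4) else { return nil }
    var names: [String] = []
    for i in 0..<Int(count) {
        // Each fat_arch entry: cputype, cpusubtype, offset, size, align (5 x UInt32)
        guard let cpuType = be32(8 + i * 20) else { return nil }
        switch cpuType {
        case 0x0100_0007: names.append("x86_64")  // CPU_TYPE_X86_64
        case 0x0100_000C: names.append("arm64")   // CPU_TYPE_ARM64
        default: names.append(String(format: "cputype 0x%08x", cpuType))
        }
    }
    return names
}

if CommandLine.arguments.count > 1,
   let data = FileManager.default.contents(atPath: CommandLine.arguments[1]) {
    print(fatArchitectures(in: data) ?? ["not a fat binary"])
}
```

Pointed at the compiled POC, this should list the same two slices that otool -f reports.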

Also with the Virtualization framework being provided by the OS on both x64 and ARM64, and with no assembly currently required to spin up the hypervisor, it is portable to whichever architecture you happen to land on.

Now all that remains is to actually start something of use within the VM.

Building our Linux Environment

We have seen just how easy it is to create our VM, now we need to actually build an environment we can use. I’m not going to talk about building Linux kernels too much here as there are plenty of resources out there which will do this with much more familiarity than I could bring, but let’s focus on ARM64 cross-compilation for this stage which, for those without an M1 machine available, will be a bit of a pain.

I’ve found in this situation that Docker is your cross-compilation friend, so let’s create a very simple Dockerfile to house our compilation tools, dependencies, and the two repos that we will be working with:

```dockerfile
FROM ubuntu:xenial

# Install dependencies
RUN apt-get update \
    && apt-get -y -q upgrade \
    && apt-get -y -q install \
        bc \
        binutils-aarch64-linux-gnu \
        build-essential \
        ccache \
        gcc-aarch64-linux-gnu \
        cpp-aarch64-linux-gnu \
        g++-aarch64-linux-gnu \
        git \
        libncurses-dev \
        libssl-dev \
        u-boot-tools \
        wget \
        xz-utils \
        bison \
        flex \
        file \
        unzip \
        python3 \
    && apt-get clean

# Install DTC
RUN wget http://ftp.fr.debian.org/debian/pool/main/d/device-tree-compiler/device-tree-compiler_1.4.0+dfsg-1_amd64.deb -O /tmp/dtc.deb \
    && dpkg -i /tmp/dtc.deb \
    && rm -f /tmp/dtc.deb

# Fetch the kernel
ENV KVER=stable \
    CCACHE_DIR=/ccache \
    SRC_DIR=/usr/src \
    DIST_DIR=/dist \
    LINUX_DIR=/usr/src/linux \
    LINUX_REPO_URL=git://git.kernel.org/pub/scm/linux/kernel/git/stable/linux-stable.git

RUN mkdir -p ${SRC_DIR} ${CCACHE_DIR} ${DIST_DIR} \
    && cd /usr/src \
    && git clone --depth 1 ${LINUX_REPO_URL} \
    && ln -s ${SRC_DIR}/linux-${KVER} ${LINUX_DIR}

WORKDIR ${LINUX_DIR}

# Update git tree
RUN git fetch --tags

# Add buildroot
RUN cd /opt \
    && wget https://buildroot.org/downloads/buildroot-2020.02.8.tar.gz \
    && tar -zxf /opt/buildroot-2020.02.8.tar.gz \
    && rm /opt/buildroot-2020.02.8.tar.gz
```

Once we have built our Docker image (the commands below assume it is tagged xpn/docker-kernelbuild), we can cross-compile an ARM64 Linux kernel using something like:

```shell
docker run -it -v $(pwd)/cache:/ccache -v $(pwd)/build:/build \
  -e ARCH=arm64 -e CROSS_COMPILE="ccache aarch64-linux-gnu-" \
  xpn/docker-kernelbuild /bin/bash -xec \
  'git fetch --tags && git checkout v5.4.9 && make menuconfig && make -j4 && cp arch/arm64/boot/Image /build/Image-arm64'
```

Figuring out just how much functionality to strip out of the kernel is a trial-and-error process, trading off what you actually want to achieve within your VM against the size of the kernel. If you just want to get up and running, you can skip the menuconfig step and use a minimal config I found to work for pivoting purposes here.
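Since the bootloader is handed the uncompressed arch/arm64/boot/Image directly, a cheap sanity check before copying it across is the arm64 boot header described in the kernel’s arm64 booting documentation: an arm64 Image carries the magic bytes "ARM\x64" at offset 0x38. The sketch below checks for that magic and also flags a gzip stream (for example if Image.gz was copied by mistake); describeKernel is a hypothetical helper.

```swift
import Foundation

// Sketch (hypothetical helper): classify a kernel file by its header.
// An uncompressed arm64 Linux Image has "ARM\x64" (41 52 4D 64) at offset 0x38;
// a gzip stream starts with 1F 8B.
func describeKernel(_ data: Data) -> String {
    let bytes = [UInt8](data.prefix(0x3C))
    if bytes.count >= 0x3C, Array(bytes[0x38..<0x3C]) == [0x41, 0x52, 0x4D, 0x64] {
        return "arm64 Image"
    }
    if bytes.starts(with: [0x1F, 0x8B]) {
        return "gzip-compressed (Image.gz?)"
    }
    return "unknown"
}

if CommandLine.arguments.count > 1,
   let data = FileManager.default.contents(atPath: CommandLine.arguments[1]) {
    print(describeKernel(data))
}
```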

With our kernel image compiled, we need a filesystem to boot alongside it. Again, with our interest being in efficiency and size, we can stick with a small initrd image bundling essential tools such as BusyBox. To build our initrd we will use the buildroot project, which also gives us the option to link against the uClibc library and save some space over traditional glibc.

Again we use our Docker image to cross-compile using:

```shell
docker run -it -v $(pwd)/build:/build xpn/docker-kernelbuild /bin/bash -xec \
  'cd /opt/buildroot-* && make menuconfig && make -j4 && cp output/images/rootfs.cpio.lz4 /build/initrd'
```

If you just want a minimal pre-configured image with BusyBox and Dropbear linked against uClibc, feel free to grab a copy of the config I used for this POC from here.
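The compression choice matters here. My understanding is that the kernel identifies a compressed initrd by its first two bytes, and that its lz4 support expects the legacy lz4 format (what lz4 -l produces) rather than the newer frame format; the kernel also needs the matching decompressor enabled (CONFIG_RD_LZ4 for an .lz4 initrd). The sketch below is a hypothetical helper that inspects those leading bytes so a mismatched initrd can be spotted before boot; only a handful of formats are covered.

```swift
import Foundation

// Sketch (hypothetical helper): guess an initrd's format from its leading bytes.
// The two-byte magics follow the kernel's decompressor table; the lz4 frame
// magic is listed only to flag it, since the kernel expects legacy lz4.
func initrdFormat(_ data: Data) -> String {
    let head = [UInt8](data.prefix(6))
    if head.starts(with: Array("070701".utf8)) {
        return "cpio (newc, uncompressed)"
    }
    let magics: [([UInt8], String)] = [
        ([0x1F, 0x8B], "gzip"),
        ([0x02, 0x21], "lz4 (legacy)"),
        ([0xFD, 0x37], "xz"),
        ([0x28, 0xB5], "zstd"),
        ([0x04, 0x22], "lz4 (frame format, not legacy)"),
    ]
    for (bytes, name) in magics where head.starts(with: bytes) {
        return name
    }
    return "unknown"
}

if CommandLine.arguments.count > 1,
   let data = FileManager.default.contents(atPath: CommandLine.arguments[1]) {
    print(initrdFormat(data))
}
```

If the check reports the frame format, recompressing with lz4 -l or switching the initrd to gzip are the obvious things to try.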

When both the kernel and initrd are built, we are looking at a file size of ~1.6MB for the initrd and ~4MB for the kernel… still less than “Hello World” compiled in GoLang. There is absolutely no doubt you could shave some further space off those images, but for the purpose of this POC I think ~5.6MB for running a Linux VM isn’t so bad.

With everything prepared, all that’s left to do is to fire up our POC and watch the console spring into life:

OK, it’s pretty cool to see this in action, but let’s finish by running something a bit more realistic for our use case… that of course being a nice Mythic Poseidon implant which will connect back and give us pivot access into the target environment. Remember that your Poseidon payload needs to be cross-compiled for aarch64, which, given that Poseidon is written in Go, is trivial to do with only a few minor modifications to the payload and a GOARCH environment variable:

The full Swift code for the VM POC can be found here, and the Dockerfile to build our cross-compilation environment, along with sample .config files for building an ARM64 kernel and initrd, can be found here.

I’m pretty excited to see where this tech takes us. After all, endpoint security vendors now need to consider not only M1 on Mac, but also VMs running a different OS on top of ARM64. Throw in qemu and things get really interesting :)